
Kubernetes Cluster Deployment

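k8s is a container-cluster management platform open-sourced by Google. It has become hugely popular, so understanding and mastering it matters more and more. This article shows how to build a simple k8s cluster and deploy pods and kubernetes-dashboard on it. For detailed concepts of the k8s architecture and its components, please consult other references.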

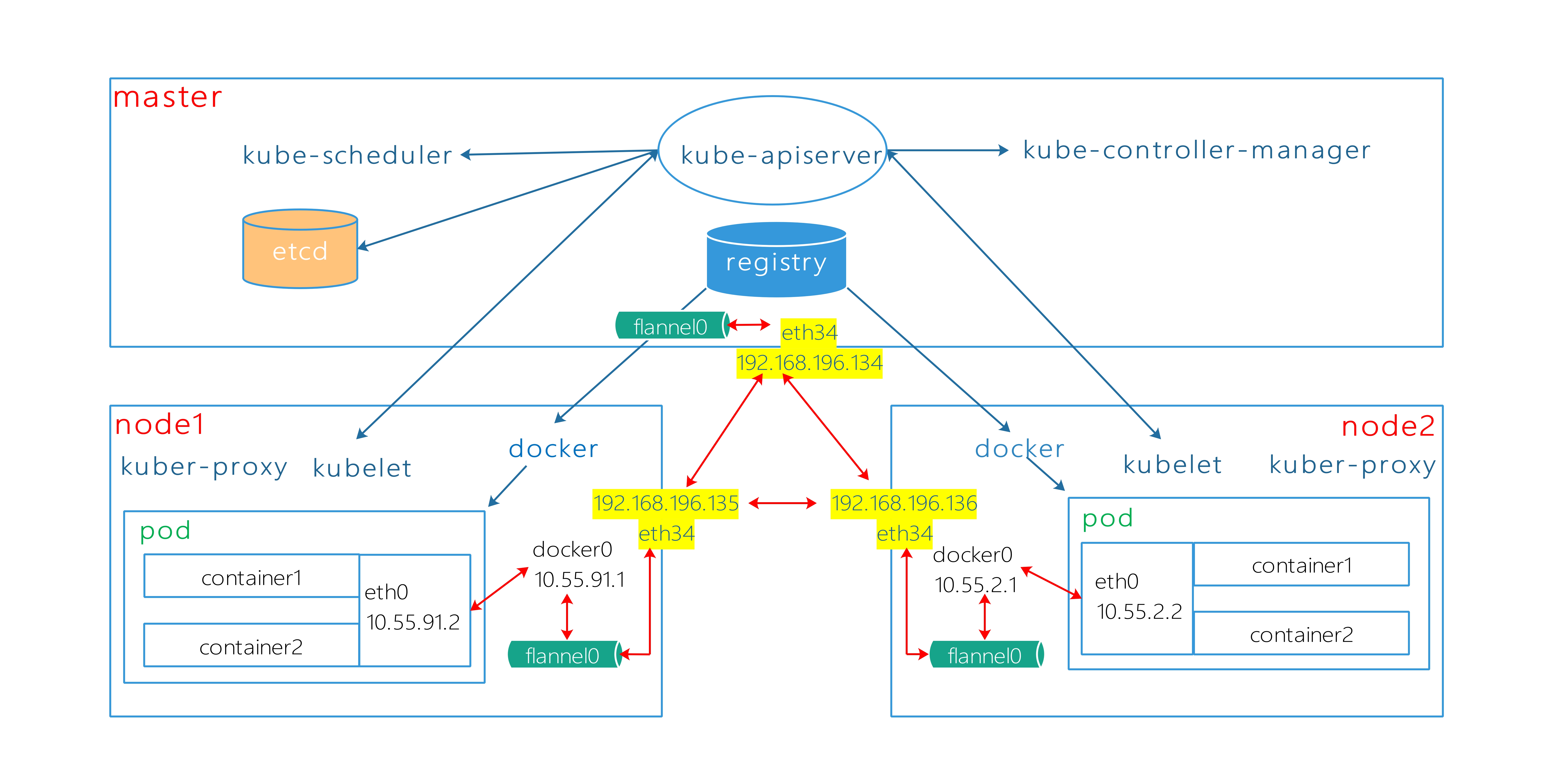

Cluster node plan and architecture

| Node | IP | Services |
|---|---|---|
| master | 192.168.196.134 | etcd, flannel, docker, kubernetes-master, docker-distribution, nginx |
| node1 | 192.168.196.135 | flannel, docker, kubernetes-node |
| node2 | 192.168.196.136 | flannel, docker, kubernetes-node |

A highly available, fault-tolerant production deployment of Kubernetes needs multiple master nodes and a separate etcd cluster.

Service installation

etcd

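All configuration that describes the cluster state is stored in etcd as key/value pairs. This state records which nodes the cluster contains and which pods need to run on them.

This example uses a single-node etcd. On the master node, install etcd with yum, edit etcd.conf, and start etcd.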

```
$ grep '^[^#]' /etc/etcd/etcd.conf
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_CLIENT_URLS="http://192.168.196.134:2379"
ETCD_NAME=etcd1
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.196.134:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.196.134:2379"
ETCD_INITIAL_CLUSTER="etcd1=http://192.168.196.134:2380"

$ ss -ntl | egrep "2379|2380"
LISTEN 0 128 192.168.196.134:2379 *:*
LISTEN 0 128 127.0.0.1:2380 *:*
```

```
# Basic etcdctl commands (etcd v2 API)
$ etcdctl --endpoints http://192.168.196.134:2379 mkdir /testdir
$ etcdctl --endpoints http://192.168.196.134:2379 ls /testdir
$ etcdctl --endpoints http://192.168.196.134:2379 ls /
/testdir
$ etcdctl --endpoints http://192.168.196.134:2379 mk /testdir/testkey testvalue
testvalue
$ etcdctl --endpoints http://192.168.196.134:2379 get /testdir/testkey
testvalue
$ etcdctl --endpoints http://192.168.196.134:2379 rm /testdir/testkey
PrevNode.Value: testvalue
$ etcdctl --endpoints http://192.168.196.134:2379 rmdir /testdir
```
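The etcdctl commands above are thin wrappers over etcd's v2 keys HTTP API (/v2/keys/...). The following Python sketch uses only the standard library and builds roughly equivalent requests. It assumes the v2 API is reachable at the ETCD address taken from etcd.conf above, and v2_request and send are hypothetical helper names. The requests are only built here; nothing goes over the network until send() is called.

```python
import json
import urllib.parse
import urllib.request

# Assumption: etcd serves its v2 API at the client URL configured in etcd.conf.
ETCD = "http://192.168.196.134:2379"


def v2_request(method, key, value=None, **params):
    """Build a Request for /v2/keys<key>; value is form-encoded, params become the query string."""
    url = f"{ETCD}/v2/keys{key}"
    if params:
        url += "?" + urllib.parse.urlencode(params)
    data = urllib.parse.urlencode({"value": value}).encode() if value is not None else None
    return urllib.request.Request(url, data=data, method=method)


def send(req):
    """Send a request and decode etcd's JSON reply (requires a reachable etcd)."""
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.load(resp)


# Rough equivalents of the etcdctl commands above.
mkdir = v2_request("PUT", "/testdir", dir="true")                          # etcdctl mkdir
mk = v2_request("PUT", "/testdir/testkey", "testvalue", prevExist="false")  # etcdctl mk (create only)
get = v2_request("GET", "/testdir/testkey")                                # etcdctl get
rm = v2_request("DELETE", "/testdir/testkey")                              # etcdctl rm
rmdir = v2_request("DELETE", "/testdir", dir="true")                       # etcdctl rmdir
```

On a live etcd, calling send(mk) and then send(get) returns a JSON object whose node.value field holds the stored string. The flannel configuration key in the next section can be written the same way with v2_request("PUT", "/atomic.io/network/config", '{"Network": "10.55.0.0/16"}').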

flannel

An overlay network solution that provides pod-to-pod network communication and gives every pod a unique IP address.

- On every node, install flannel with yum and edit /etc/sysconfig/flanneld

```
$ grep '^[^#]' /etc/sysconfig/flanneld
FLANNEL_ETCD_ENDPOINTS="http://192.168.196.134:2379"
FLANNEL_ETCD_PREFIX="/atomic.io/network"   <-- etcd directory where flannel's configuration is stored
FLANNEL_OPTIONS="-iface=ens34 -ip-masq=true"
```

- In etcd, manually create the address pool for the custom flannel network, 10.55.0.0/16

```
$ etcdctl --endpoints http://192.168.196.134:2379 mkdir /atomic.io
$ etcdctl --endpoints http://192.168.196.134:2379 mkdir /atomic.io/network
$ etcdctl --endpoints http://192.168.196.134:2379 mk /atomic.io/network/config '{"Network": "10.55.0.0/16"}'
{"Network": "10.55.0.0/16"}
```

- Start the flanneld service on every node. You can then check the address that flannel randomly assigned to the flannel0 interface

```
# master node
$ ifconfig flannel0 | grep -A1 flannel0
flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
inet 10.55.68.0 netmask 255.255.0.0 destination 10.55.68.0

# flannel's configuration is stored in the subnet.env file
$ cat /var/run/flannel/subnet.env
FLANNEL_NETWORK=10.55.0.0/16
FLANNEL_SUBNET=10.55.68.1/24
FLANNEL_MTU=1472
FLANNEL_IPMASQ=true

# node1
$ ifconfig flannel0 | grep -A1 flannel0
flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
inet 10.55.91.0 netmask 255.255.0.0 destination 10.55.91.0

# node2
$ ifconfig flannel0 | grep -A1 flannel0
flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
inet 10.55.2.0 netmask 255.255.0.0 destination 10.55.2.0
```
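flannel splits the 10.55.0.0/16 pool into one subnet per host, and the outputs above show a /24 for each node. Treat that /24 as an assumption: it is flannel's default SubnetLen, and a SubnetLen set in the etcd config would override it.

The Python sketch below uses only the standard ipaddress module. It parses subnet.env and maps a pod IP to the node whose lease contains it, which is roughly the lookup flannel performs when routing pod-to-pod traffic between hosts. The node table is hard-coded from this article's output, and the helper names are hypothetical.

```python
import ipaddress

# Overall flannel network, as written to /atomic.io/network/config in etcd.
FLANNEL_NETWORK = ipaddress.ip_network("10.55.0.0/16")

# Per-node /24 leases, read off the flannel0 addresses shown above.
NODE_SUBNETS = {
    "master": ipaddress.ip_network("10.55.68.0/24"),
    "node1": ipaddress.ip_network("10.55.91.0/24"),
    "node2": ipaddress.ip_network("10.55.2.0/24"),
}


def parse_subnet_env(text):
    """Parse KEY=VALUE lines such as /var/run/flannel/subnet.env into a dict."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            env[key] = value
    return env


def lease_from_env(env):
    """Subnet leased by this node, e.g. FLANNEL_SUBNET=10.55.68.1/24 -> 10.55.68.0/24."""
    return ipaddress.ip_interface(env["FLANNEL_SUBNET"]).network


def node_for_ip(ip):
    """Return the node whose lease contains the given pod IP, or None."""
    addr = ipaddress.ip_address(ip)
    for node, subnet in NODE_SUBNETS.items():
        if addr in subnet:
            return node
    return None


if __name__ == "__main__":
    # A /16 split into /24 leases leaves room for 256 flannel hosts.
    print(len(list(FLANNEL_NETWORK.subnets(new_prefix=24))))
    print(node_for_ip("10.55.2.2"))
```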

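registry

Set up a private image registry for the cluster. You can either pull the registry image from Docker Hub and run it, or install docker-distribution and nginx with yum. This article uses the latter approach.

- On the master node, install docker-distribution with yum, edit config.yml, and start the service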

```
$ cat /etc/docker-distribution/registry/config.yml
version: 0.1
log:
  fields:
    service: registry
storage:
  cache:
    layerinfo: inmemory
  filesystem:
    rootdirectory: /var/lib/registry   # storage path
http:
  addr: 127.0.0.1:5000                 # listen address and port
```

- On the master node, install nginx with yum, create an nginx config file named registry.conf that proxies to the backend registry, and start the service

```
$ cat /etc/nginx/conf.d/registry.conf
server {
    listen 8088;
    server_name registry;
    client_max_body_size 0;

    location / {
        proxy_pass http://127.0.0.1:5000;
        proxy_next_upstream error timeout invalid_header http_500 http_502 http_503 http_504;
        proxy_redirect off;
        proxy_buffering off;
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

```
# ports 8088 and 5000 are now listening
$ netstat -ntl
Proto Recv-Q Send-Q Local Address Foreign Address State
tcp 0 0 192.168.196.134:2379 0.0.0.0:* LISTEN
tcp 0 0 127.0.0.1:2380 0.0.0.0:* LISTEN
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN
tcp 0 0 0.0.0.0:8088 0.0.0.0:* LISTEN
tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN
tcp 0 0 127.0.0.1:5000 0.0.0.0:* LISTEN
tcp6 0 0 :::80 :::* LISTEN
tcp6 0 0 :::22 :::* LISTEN
tcp6 0 0 ::1:25 :::* LISTEN
```

- On every node, edit /etc/hosts so that the name registry resolves (the master node is shown as an example)

```
$ cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.196.134 registry
192.168.196.135 node1
192.168.196.136 node2
```

```
# verify that the registry service is working
$ curl -Lv registry:8088/v2
* About to connect() to registry port 8088 (#0)
* Trying 192.168.196.134...
* Connected to registry (192.168.196.134) port 8088 (#0)
> GET /v2 HTTP/1.1
> User-Agent: curl/7.29.0
> Host: registry:8088
> Accept: */*
>
< HTTP/1.1 301 Moved Permanently
< Server: nginx/1.12.2
< Date: Tue, 03 Jul 2018 14:45:49 GMT
< Content-Type: text/html; charset=utf-8
< Content-Length: 39
< Connection: keep-alive
< Docker-Distribution-Api-Version: registry/2.0
< Location: /v2/
<
* Ignoring the response-body
* Connection #0 to host registry left intact
* Issue another request to this URL: 'HTTP://registry:8088/v2/'
* Found bundle for host registry: 0x78fee0
* Re-using existing connection! (#0) with host registry
* Connected to registry (192.168.196.134) port 8088 (#0)
> GET /v2/ HTTP/1.1
> User-Agent: curl/7.29.0
> Host: registry:8088
> Accept: */*
>
< HTTP/1.1 200 OK
< Server: nginx/1.12.2
< Date: Tue, 03 Jul 2018 14:45:49 GMT
< Content-Type: application/json; charset=utf-8
< Content-Length: 2
< Connection: keep-alive
< Docker-Distribution-Api-Version: registry/2.0
<
* Connection #0 to host registry left intact
{}
```
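The curl check above reduces to two facts: GET /v2/ answers 200, and the response carries a Docker-Distribution-Api-Version: registry/2.0 header. The following is a minimal Python sketch of the same check, plus URL helpers for the Docker Registry HTTP API V2 endpoints /v2/_catalog and /v2/<name>/tags/list. It assumes the nginx front end at http://registry:8088 from this article, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: the nginx proxy configured above, reachable via the /etc/hosts entry.
REGISTRY = "http://registry:8088"


def looks_like_registry_v2(status, headers):
    """True if a /v2/ response has the 200 status and API-version header that curl showed."""
    lowered = {k.lower(): v for k, v in headers.items()}
    return status == 200 and lowered.get("docker-distribution-api-version", "").startswith("registry/2.")


def catalog_url(base=REGISTRY):
    """Endpoint listing the repositories stored in the registry."""
    return f"{base}/v2/_catalog"


def tags_url(repo, base=REGISTRY):
    """Endpoint listing the tags of one repository, e.g. nginx."""
    return f"{base}/v2/{repo}/tags/list"


def fetch_json(url):
    """GET an endpoint and return (status, headers, decoded body); needs the registry to be up."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.status, dict(resp.headers), json.load(resp)
```

For example, status, headers, _ = fetch_json(REGISTRY + "/v2/") followed by looks_like_registry_v2(status, headers) repeats the curl test. urlopen follows the /v2 to /v2/ redirect on its own. After images are pushed, fetch_json(catalog_url()) lists them.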

docker

1. On the master node, install docker-engine-1.13.1-1 with yum

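- Edit /etc/docker/daemon.json. insecure-registries points docker at the private registry. The devicemapper options assume that an LVM thin pool, docker-vg/thinpool, has already been created.

```
$ cat /etc/docker/daemon.json
{
  "storage-driver": "devicemapper",
  "storage-opts": [
    "dm.thinpooldev=/dev/mapper/docker--vg-thinpool",
    "dm.use_deferred_removal=true",
    "dm.use_deferred_deletion=true"
  ],
  "graph": "/docker",
  "insecure-registries": ["registry:8088"]
}
```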

- Edit /usr/lib/systemd/system/docker.service:
  - Add the $DOCKER_NETWORK_OPTIONS startup parameter. Otherwise docker cannot configure the docker0 bridge with the subnet that flannel randomly assigned.
  - Under [Service], add ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT. Otherwise, once docker starts, the default policy of the FORWARD chain is DROP.

```
$ vi /usr/lib/systemd/system/docker.service
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
```

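```
# After flanneld starts, it automatically generates /var/run/flannel/docker, which holds docker's
# network parameters. docker.service must load this file (e.g. through an EnvironmentFile= line)
# for $DOCKER_NETWORK_OPTIONS to be defined
$ cat /var/run/flannel/docker
DOCKER_OPT_BIP="--bip=10.55.68.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1472"
DOCKER_NETWORK_OPTIONS=" --bip=10.55.68.1/24 --ip-masq=false --mtu=1472"
```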
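Comparing this file with subnet.env shows how each docker option is derived:

- --bip comes from FLANNEL_SUBNET, so docker0 takes the first address of the node's lease.
- --mtu comes from FLANNEL_MTU.
- docker's own masquerading is switched off because flannel already masquerades (FLANNEL_IPMASQ=true).

The Python sketch below reproduces that mapping. It is modelled on the values shown here, not on the source of the script flannel uses to write the file, so treat the FLANNEL_IPMASQ=false branch as an assumption. docker_opts is a hypothetical name.

```python
def docker_opts(env):
    """Derive the DOCKER_OPT_* values and DOCKER_NETWORK_OPTIONS from a subnet.env dict."""
    # If flannel masquerades pod traffic itself, docker must not do it a second time.
    flannel_masq = env.get("FLANNEL_IPMASQ") == "true"
    opts = {
        "DOCKER_OPT_BIP": f"--bip={env['FLANNEL_SUBNET']}",    # docker0 address = lease gateway
        "DOCKER_OPT_IPMASQ": "--ip-masq=" + ("false" if flannel_masq else "true"),
        "DOCKER_OPT_MTU": f"--mtu={env['FLANNEL_MTU']}",       # match flannel0's reduced MTU
    }
    # The right-hand side is evaluated first, so only the three options above are joined.
    opts["DOCKER_NETWORK_OPTIONS"] = " ".join(opts.values())
    return opts


if __name__ == "__main__":
    master_env = {
        "FLANNEL_NETWORK": "10.55.0.0/16",
        "FLANNEL_SUBNET": "10.55.68.1/24",
        "FLANNEL_MTU": "1472",
        "FLANNEL_IPMASQ": "true",
    }
    print(docker_opts(master_env)["DOCKER_NETWORK_OPTIONS"])
```

With the master's subnet.env, the joined string matches the DOCKER_NETWORK_OPTIONS value above, apart from the leading space in the generated file.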

- Start docker

```
$ systemctl daemon-reload
$ systemctl start docker
$ ifconfig | grep -A1 "docker0\|flannel0"
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
inet 10.55.68.1 netmask 255.255.255.0 broadcast 0.0.0.0
--
flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
inet 10.55.68.0 netmask 255.255.0.0 destination 10.55.68.0

$ docker info | grep -A2 Insecure
Insecure Registries:
registry:8088
127.0.0.0/8
```

2. On node1 and node2, install docker-1.13.1 with yum

- Add the registry address by editing /etc/sysconfig/docker

```
$ grep '^[^#]' /etc/sysconfig/docker
OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false -g /docker'
if [ -z "${DOCKER_CERT_PATH}" ]; then
    DOCKER_CERT_PATH=/etc/docker
fi
ADD_REGISTRY="--add-registry registry"
INSECURE_REGISTRY="--insecure-registry registry:8088"
```

- Edit /usr/lib/systemd/system/docker.service: under [Service], add ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT. Otherwise, once docker starts, the default policy of the FORWARD chain is DROP.

- Start the docker service

```
$ systemctl daemon-reload
$ systemctl start docker
$ ifconfig | grep -A1 "docker0\|flannel"
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
inet 10.55.2.1 netmask 255.255.255.0 broadcast 0.0.0.0
--
flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
inet 10.55.2.0 netmask 255.255.0.0 destination 10.55.2.0

$ docker info | grep -A2 Insecure
Insecure Registries:
registry:8088
127.0.0.0/8
```

kubernetes-master

- On the master node, install kubernetes-master with yum and edit the configuration files under /etc/kubernetes/

```
$ ls /etc/kubernetes/
apiserver config controller-manager scheduler

# config holds the settings shared by apiserver, controller-manager and scheduler
$ grep '^[^#]' /etc/kubernetes/config
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
KUBE_ALLOW_PRIV="--allow-privileged=false"
KUBE_MASTER="--master=http://192.168.196.134:8080"   <-- apiserver address and port

$ grep '^[^#]' /etc/kubernetes/apiserver
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.196.134:2379"   <-- etcd service address
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"   <-- Service network of the k8s cluster
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
KUBE_API_ARGS=""
```

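Start the kube-apiserver, kube-scheduler and kube-controller-manager services on the master node.

- Kubernetes API Server: provided by the kube-apiserver process. Users interact with it through REST calls or the kubectl CLI. It handles every operation on API objects (Pod, RC, Service, etc.), such as create, delete, update, get and watch, and it is the central hub for data exchange and communication between the cluster's functional modules.
- Controller Manager: the cluster's internal management and control center. It manages Nodes, Pod replicas, service Endpoints, Namespaces, ServiceAccounts, ResourceQuotas and so on. It also provides self-healing, keeping the cluster in its desired state.
- Scheduler: schedules Pods. It binds each Pod awaiting scheduling, such as Pods newly created through the API or Pods the Controller Manager creates to make up replicas, to a node according to its scheduling algorithms and policies. It then writes the binding through the API Server, which persists it in etcd.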

```
$ systemctl start kube-apiserver.service kube-scheduler.service kube-controller-manager.service

# test with the command-line tool
$ kubectl -s 192.168.196.134:8080 --version
Kubernetes v1.5.2
```

kubernetes-node

- On node1/node2, install kubernetes-node with yum and edit the configuration files under /etc/kubernetes/

```
# config holds the settings shared by kubelet and kube-proxy
$ grep '^[^#]' /etc/kubernetes/config
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
KUBE_ALLOW_PRIV="--allow-privileged=false"
KUBE_MASTER="--master=http://192.168.196.134:8080"

$ grep '^[^#]' /etc/kubernetes/kubelet
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_HOSTNAME="--hostname-override=node1"   <-- node1 or node2, depending on the node
KUBELET_API_SERVER="--api-servers=http://192.168.196.134:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"   <-- image of the infrastructure container started with every pod
KUBELET_ARGS=""
```

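Start kubelet and kube-proxy on the nodes.

- kubelet: handles the tasks the master assigns to this node and manages Pods and their containers. It watches, through the API Server, for the Pod-binding events produced by the Scheduler. Each kubelet registers its node with the API Server, periodically reports the node's resource usage to the master, and monitors container and node resources through cAdvisor.
- kube-proxy: acts as a transparent proxy and load balancer for Services. Its core job is to forward requests for a Service to the backend Pod instances; in the default iptables proxy mode, this is done with iptables NAT rules. A Service is an abstraction over a group of Pods and is assigned a virtual Cluster IP when it is created.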

```
$ systemctl start kubelet.service kube-proxy.service

# test with the command-line tool
$ kubectl -s 192.168.196.134:8080 get node
NAME STATUS AGE
node1 Ready 2m
node2 Ready 2m
```
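kubectl get node derives each node's STATUS from its Ready condition. The following is a hedged Python sketch of the same check. It assumes the unauthenticated insecure port 8080 configured above and the standard /api/v1/nodes endpoint, which returns a NodeList whose items carry status.conditions entries. node_readiness and fetch_nodes are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: the apiserver's insecure port from --insecure-bind-address / KUBE_MASTER above.
APISERVER = "http://192.168.196.134:8080"


def node_readiness(node_list):
    """Map node name -> True/False using each node's "Ready" condition."""
    ready = {}
    for node in node_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        status = next((c.get("status") for c in conditions if c.get("type") == "Ready"), None)
        ready[node["metadata"]["name"]] = status == "True"
    return ready


def fetch_nodes(base=APISERVER):
    """GET /api/v1/nodes and decode the NodeList (needs network access to the apiserver)."""
    with urllib.request.urlopen(f"{base}/api/v1/nodes", timeout=5) as resp:
        return json.load(resp)
```

node_readiness(fetch_nodes()) returns a name-to-boolean mapping, which is convenient in scripts that wait for newly added nodes before deploying.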

Deployment examples

1. Check which API groups and versions this version of Kubernetes serves

```
$ curl 192.168.196.134:8080
{
  "paths": [
    "/api",
    "/api/v1",
    "/apis",
    "/apis/apps",
    "/apis/apps/v1beta1",
    "/apis/authentication.k8s.io",
    "/apis/authentication.k8s.io/v1beta1",
    "/apis/authorization.k8s.io",
    "/apis/authorization.k8s.io/v1beta1",
    "/apis/autoscaling",
    "/apis/autoscaling/v1",
    "/apis/batch",
    "/apis/batch/v1",
    "/apis/batch/v2alpha1",
    "/apis/certificates.k8s.io",
    "/apis/certificates.k8s.io/v1alpha1",
    "/apis/extensions",
    "/apis/extensions/v1beta1",
    "/apis/policy",
    "/apis/policy/v1beta1",
    "/apis/rbac.authorization.k8s.io",
    "/apis/rbac.authorization.k8s.io/v1alpha1",
    "/apis/storage.k8s.io",
    "/apis/storage.k8s.io/v1beta1",
    "/healthz",
    "/healthz/ping",
    "/healthz/poststarthook/bootstrap-controller",
    "/healthz/poststarthook/extensions/third-party-resources",
    "/healthz/poststarthook/rbac/bootstrap-roles",
    "/logs",
    "/metrics",
    "/swaggerapi/",
    "/ui/",
    "/version"
  ]
}
```
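The paths above double as the list of valid apiVersion values:

- Core resources are served under /api/v1, which corresponds to apiVersion: v1.
- Other groups are served under /apis/<group>/<version>, which corresponds to apiVersion: <group>/<version>.

A small sketch follows, using a hypothetical helper and only the standard library. It derives that set so a manifest's apiVersion can be checked before the manifest is created. For example, the nginx Deployment below uses extensions/v1beta1.

```python
def served_versions(paths):
    """Return the apiVersion strings ("v1", "apps/v1beta1", ...) present in a discovery paths list."""
    versions = set()
    for path in paths:
        parts = path.strip("/").split("/")
        if parts[0] == "api" and len(parts) == 2:
            versions.add(parts[1])                   # core group: /api/v1 -> "v1"
        elif parts[0] == "apis" and len(parts) == 3:
            versions.add(f"{parts[1]}/{parts[2]}")  # /apis/<group>/<version> -> "<group>/<version>"
    return versions
```

To cover the full list above, pass it json.load(urllib.request.urlopen("http://192.168.196.134:8080"))["paths"]. Paths such as /healthz/ping or the bare group paths (/apis/apps) are ignored.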

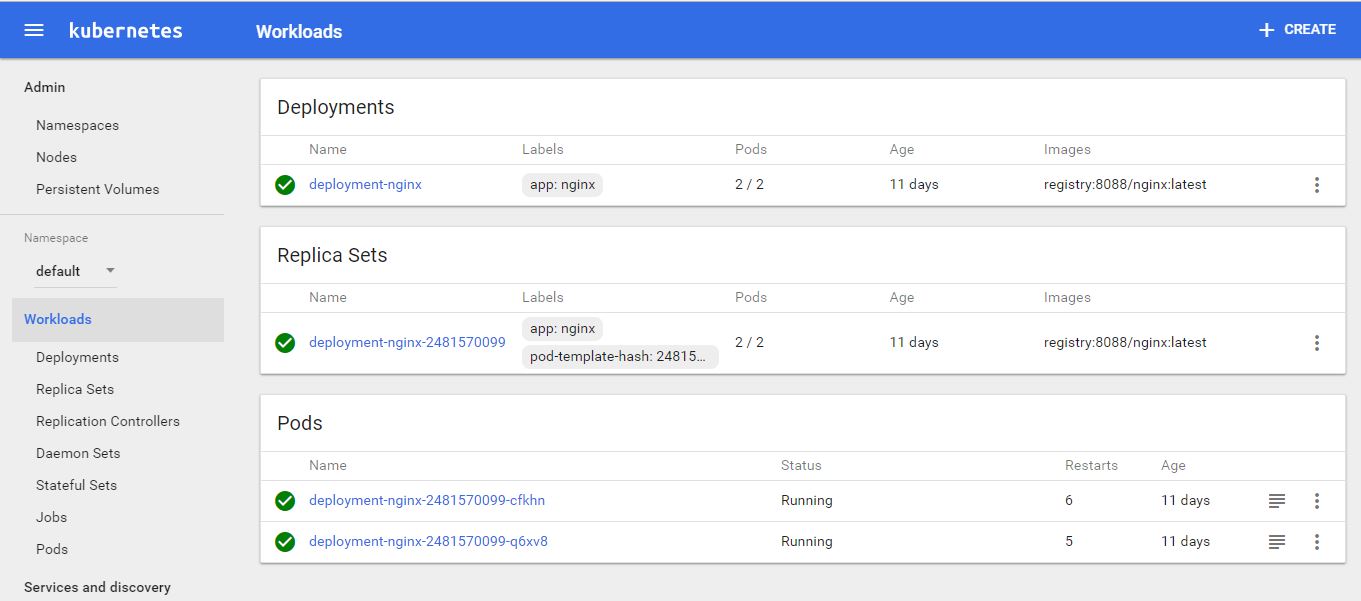

2. Example: a Deployment running the nginx service

```
$ cat deployment-nginx.yml
apiVersion: extensions/v1beta1   # API version
kind: Deployment                 # resource type: Deployment
metadata:
  name: deployment-nginx         # name of the Deployment
spec:
  replicas: 2                    # desired number of pod replicas
  revisionHistoryLimit: 10
  template:
    metadata:
      labels:                    # pod labels
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry:8088/nginx:latest
        ports:
        - containerPort: 80

$ kubectl -s 192.168.196.134:8080 create -f deployment-nginx.yml
```
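Indentation mistakes are the most common way a hand-written manifest like the one above breaks. Since kubectl create -f accepts JSON as well as YAML, one option is to generate the same object from code. This is a minimal sketch with the standard json module. The function name and parameters are illustrative and not part of any Kubernetes tool.

```python
import json


def nginx_deployment(name="deployment-nginx", replicas=2,
                     image="registry:8088/nginx:latest", port=80):
    """Return the Deployment from deployment-nginx.yml as a plain dict."""
    labels = {"app": "nginx"}  # pod labels; the Service selector later matches on these
    return {
        "apiVersion": "extensions/v1beta1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "revisionHistoryLimit": 10,
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [
                    {"name": "nginx", "image": image, "ports": [{"containerPort": port}]}
                ]},
            },
        },
    }


if __name__ == "__main__":
    # Redirect to a file, e.g. > deployment-nginx.json, then pass it to kubectl create -f.
    print(json.dumps(nginx_deployment(), indent=2))
```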

View the Deployment.

get lists a resource and, with -o yaml or -o json, prints its full definition; describe shows a resource's status and related events.

```
$ kubectl -s 192.168.196.134:8080 get deployment
NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
deployment-nginx 2 2 2 2 7d

$ kubectl -s 192.168.196.134:8080 describe deployment
Name: deployment-nginx
Namespace: default
CreationTimestamp: Fri, 27 Jul 2018 13:37:02 +0800
Labels: app=nginx
Selector: app=nginx
Replicas: 2 updated | 2 total | 2 available | 0 unavailable
StrategyType: RollingUpdate
MinReadySeconds: 0
RollingUpdateStrategy: 1 max unavailable, 1 max surge
Conditions:
  Type Status Reason
  ---- ------ ------
  Available True MinimumReplicasAvailable
OldReplicaSets: <none>
NewReplicaSet: deployment-nginx-2481570099 (2/2 replicas created)
No events.

$ kubectl -s 192.168.196.134:8080 get deployment -o yaml   <-- output can be exported as json or yaml
apiVersion: v1
items:
- apiVersion: extensions/v1beta1
  kind: Deployment
  metadata:
    annotations:
      deployment.kubernetes.io/revision: "1"
    creationTimestamp: 2018-07-27T05:37:02Z
    generation: 1
    labels:
      app: nginx
    name: deployment-nginx
    namespace: default
    resourceVersion: "88336"
    selfLink: /apis/extensions/v1beta1/namespaces/default/deployments/deployment-nginx
    uid: 16ed87a7-915f-11e8-a083-000c29815d48
  spec:
    replicas: 2
    revisionHistoryLimit: 10
    selector:
      matchLabels:
        app: nginx
    strategy:
      rollingUpdate:
        maxSurge: 1
        maxUnavailable: 1
      type: RollingUpdate
    template:
      metadata:
        creationTimestamp: null
        labels:
          app: nginx
      spec:
        containers:
        - image: registry:8088/nginx:latest
          imagePullPolicy: Always
          name: nginx
          ports:
          - containerPort: 80
            protocol: TCP
          resources: {}
          terminationMessagePath: /dev/termination-log
        dnsPolicy: ClusterFirst
        restartPolicy: Always
        securityContext: {}
        terminationGracePeriodSeconds: 30
  status:
    availableReplicas: 2
    conditions:
    - lastTransitionTime: 2018-07-30T05:36:05Z
      lastUpdateTime: 2018-07-30T05:36:05Z
      message: Deployment has minimum availability.
      reason: MinimumReplicasAvailable
      status: "True"
      type: Available
    observedGeneration: 1
    replicas: 2
    updatedReplicas: 2
kind: List
metadata: {}
resourceVersion: ""
selfLink: ""
```

- View pod information

```
# the node each pod was scheduled to and the IP it was assigned
$ kubectl -s 192.168.196.134:8080 get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE
deployment-nginx-2481570099-cfkhn 1/1 Running 4 7d 10.55.2.2 node2
deployment-nginx-2481570099-q6xv8 1/1 Running 3 7d 10.55.91.2 node1

# besides get/describe, which show all of a pod's information, a template can extract the value of a given field
$ kubectl -s 192.168.196.134:8080 get pod -o template \
    deployment-nginx-2481570099-cfkhn --template={{.status.podIP}}
10.55.2.2
```
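When the pod JSON is processed in a script rather than through a Go template, the same fields are plain nested keys: status.podIP and spec.nodeName, which feed the IP and NODE columns of get pod -o wide. The following is a minimal sketch with hypothetical helpers. field() only follows map keys; it does not support Go-template functions, ranges or list indexing.

```python
def field(obj, path):
    """Follow a Go-template-style path such as ".status.podIP" through nested dicts."""
    for key in path.lstrip(".").split("."):
        obj = obj[key]
    return obj


def pod_placement(pod_list):
    """(name, podIP, nodeName) for every pod in a decoded `get pod -o json` PodList."""
    return [
        (p["metadata"]["name"], p.get("status", {}).get("podIP"), p.get("spec", {}).get("nodeName"))
        for p in pod_list.get("items", [])
    ]
```

For example, piping kubectl -s 192.168.196.134:8080 get pod deployment-nginx-2481570099-cfkhn -o json into a script that calls field(json.load(sys.stdin), ".status.podIP") prints the same value as the template command above.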

- Verify pod connectivity over the flannel network

```
# log in to node1
$ docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
17485262b0a6 registry:8088/nginx:latest "nginx -g 'daemon ..." 5 hours ago Up 5 hours k8s_nginx.4f504e9_deployment-nginx-2481570099-q6xv8_default_16f355db-915f-11e8-a083-000c29815d48_e2f3c24d
dc1833e50415 registry:8088/pod-infrastructure:v3.4 "/pod" 5 hours ago Up 5 hours k8s_POD.ee70020d_deployment-nginx-2481570099-q6xv8_default_16f355db-915f-11e8-a083-000c29815d48_7c54ed33

# access the pod on this node
$ curl 10.55.91.2:80
Welcome to nginx!

# access the pod on node2
$ curl 10.55.2.2:80
Welcome to nginx!

# log in to node2
$ docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
c087c12e8afd registry:8088/nginx:latest "nginx -g 'daemon off" 5 hours ago Up 5 hours k8s_nginx.4f504e9_deployment-nginx-2481570099-cfkhn_default_16f36465-915f-11e8-a083-000c29815d48_80f894f4
195f5d3de8c1 registry:8088/pod-infrastructure:v3.4 "/pod" 5 hours ago Up 5 hours k8s_POD.ee70020d_deployment-nginx-2481570099-cfkhn_default_16f36465-915f-11e8-a083-000c29815d48_e4b4e2e1

# access the pod on this node
$ curl 10.55.2.2:80
Welcome to nginx!

# access the pod on node1
$ curl 10.55.91.2:80
Welcome to nginx!

# the master node is also on the flannel network, so it can reach the pod applications as well
```

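3. Example: a Service

```
# create a Service for the deployed pods
$ cat service-nginx.yml
kind: Service
apiVersion: v1
metadata:
  name: service-nginx
spec:
  ports:
  - port: 80            # port the Service listens on
    targetPort: 80      # port of the nginx container
  selector:
    app: nginx          # backend pods are selected by their label
  type: NodePort        # also expose the Service on a port of every node

# In NodePort mode, the same port (nodePort, allocated at random from 30000-32767 when not
# specified) is opened on every node's network interfaces to serve external traffic,
# whether or not a backend pod runs on that node
$ kubectl -s 192.168.196.134:8080 create -f service-nginx.yml
```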

```
# view the details of service-nginx
$ kubectl -s 192.168.196.134:8080 get service -o wide service-nginx
NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
service-nginx 10.254.104.158 <nodes> 80:30284/TCP 7d app=nginx

$ kubectl -s 192.168.196.134:8080 get service -o yaml service-nginx
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: 2018-07-27T07:01:04Z
  name: service-nginx
  namespace: default
  resourceVersion: "17086"
  selfLink: /api/v1/namespaces/default/services/service-nginx
  uid: d437faec-916a-11e8-a083-000c29815d48
spec:
  clusterIP: 10.254.104.158   <-- ClusterIP allocated to the Service from the service-cluster-ip-range
  ports:
  - nodePort: 30284           <-- randomly allocated port opened on the nodes' network interfaces
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  sessionAffinity: None       <-- 1)
  type: NodePort
status:
  loadBalancer: {}
```

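1) With the default sessionAffinity value, None, requests are load-balanced across the backend pods. Setting it to ClientIP pins each client IP to the same pod (session affinity).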
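The addresses kubectl reported can be checked against the cluster settings:

- The ClusterIP must fall inside --service-cluster-ip-range=10.254.0.0/16 from the apiserver config.
- The nodePort must fall inside the node-port range. No --service-node-port-range was set above, so the range is assumed to be the default 30000-32767.

The following is a hedged Python sketch with hypothetical helper names. It also shows the spec fragment for the ClientIP affinity mentioned in note 1).

```python
import ipaddress

# Assumptions: values from /etc/kubernetes/apiserver and the default node-port range.
SERVICE_CIDR = ipaddress.ip_network("10.254.0.0/16")
NODE_PORT_RANGE = range(30000, 32768)  # 30000-32767 inclusive


def check_service(cluster_ip, node_port):
    """Return a list of problems; an empty list means both values look valid."""
    problems = []
    if ipaddress.ip_address(cluster_ip) not in SERVICE_CIDR:
        problems.append(f"clusterIP {cluster_ip} is outside {SERVICE_CIDR}")
    if node_port not in NODE_PORT_RANGE:
        problems.append(f"nodePort {node_port} is outside 30000-32767")
    return problems


def session_affinity_spec(pin_client=False):
    """Spec fragment for sessionAffinity: "None" (default) or "ClientIP"."""
    return {"sessionAffinity": "ClientIP" if pin_client else "None"}
```

check_service("10.254.104.158", 30284) returns an empty list for the values shown above. A pod IP such as 10.55.2.2 would be flagged, because the Service range and the flannel range must not overlap.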

```
$ kubectl -s 192.168.196.134:8080 describe service service-nginx
Name: service-nginx
Namespace: default
Labels: <none>
Selector: app=nginx
Type: NodePort
IP: 10.254.104.158
Port: <unset> 80/TCP
NodePort: <unset> 30284/TCP
Endpoints: 10.55.2.2:80,10.55.91.2:80
Session Affinity: None
No events.
```

```
# from any node, access the ClusterIP:Port of service-nginx
$ curl 10.254.104.158:80
Welcome to nginx!

# watching the nginx access logs of both pods shows requests being distributed across the two backends

# from any node, access service-nginx through the NodePort opened on every host's network interface
[root@master ~]# curl 192.168.196.136:30284
Welcome to nginx!
[root@master ~]# curl 192.168.196.135:30284
Welcome to nginx!
[root@node1 ~]# curl 192.168.196.135:30284
Welcome to nginx!
[root@node1 ~]# curl 192.168.196.136:30284
Welcome to nginx!
[root@node2 ~]# curl 192.168.196.136:30284
Welcome to nginx!
[root@node2 ~]# curl 192.168.196.135:30284
Welcome to nginx!
```
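How the requests are spread depends on kube-proxy's mode:

- In the userspace mode, kube-proxy picks backends round-robin.
- In the iptables mode, the default when the kernel supports it, kube-proxy writes one rule per endpoint. Rule i of n (0-based) matches with probability 1/(n - i) through the iptables statistic random match, and the last rule always matches.

The result is a uniform random choice rather than strict alternation. The Python sketch below only models that arithmetic; it does not read or write iptables, and the helper names are hypothetical.

```python
import random
from collections import Counter


def rule_probabilities(n):
    """Match probability of each of the n per-endpoint rules, in rule order."""
    return [1.0 / (n - i) for i in range(n)]


def pick_endpoint(endpoints, rng):
    """Walk the rules in order, as the kernel does, and return the first one that matches."""
    for endpoint, p in zip(endpoints, rule_probabilities(len(endpoints))):
        if rng.random() < p:  # the last p is 1.0, so the loop always returns
            return endpoint
    return endpoints[-1]


def effective_share(n):
    """Overall probability that each endpoint is chosen; every share works out to 1/n."""
    shares, remaining = [], 1.0
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1.0 - p
    return shares


if __name__ == "__main__":
    endpoints = ["10.55.2.2:80", "10.55.91.2:80"]  # Endpoints of service-nginx above
    rng = random.Random(0)
    print(Counter(pick_endpoint(endpoints, rng) for _ in range(10_000)))
```

With two endpoints the first rule matches half of the new connections and the second takes the rest, which is why the access logs look balanced without being a strict rotation.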

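4. Deploy kubernetes dashboard (project on GitHub: https://github.com/kubernetes/dashboard)

- Since the installed k8s version is v1.5.2, this article uses the v1.5.0 release of the dashboard image. Search for it on Docker Hub (https://hub.docker.com/r/ist0ne/kubernetes-dashboard-amd64/) and pull it locally, then tag it and push it to the private registry.

- Get the dashboard deployment file from GitHub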

```
$ wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.5.0/src/deploy/kubernetes-dashboard.yaml
```

Edit the following in kubernetes-dashboard.yaml:

- set the container image to registry:8088/kubernetes-dashboard-amd64:v1.5.0
- add the argument --apiserver-host=http://192.168.196.134:8080 to the container's args

Deploy the dashboard

```
$ kubectl -s 192.168.196.134:8080 create -f kubernetes-dashboard.yaml
deployment "kubernetes-dashboard" created
service "kubernetes-dashboard" created
```

Note that kubernetes-dashboard is deployed in the kube-system namespace rather than default, so pass -n kube-system or --namespace=kube-system on the command line to see its resources.
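The namespace is part of every namespaced resource's REST path, which is why a query in default cannot see the dashboard. The selfLink values shown earlier follow the pattern .../namespaces/<namespace>/<resource>/<name>. The following sketch, built around a hypothetical helper, reproduces that layout.

```python
def resource_path(group_version, namespace, resource, name=None):
    """REST path of a namespaced resource; the core group ("v1") lives under /api, others under /apis."""
    prefix = "/api/v1" if group_version == "v1" else f"/apis/{group_version}"
    path = f"{prefix}/namespaces/{namespace}/{resource}"
    return f"{path}/{name}" if name else path
```

Prefixing the result with http://192.168.196.134:8080 gives a URL that can be fetched directly from the insecure port. For example, resource_path("v1", "kube-system", "services", "kubernetes-dashboard") points at the dashboard's Service, while the same name under default does not exist.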

- Open http://192.168.196.134:8080/ui in a browser