
# Building a Highly Available Prometheus Monitoring Cluster (Part 1)

Highly Available Prometheus Monitoring Cluster Deployment - This article is part of a series.

## Introduction

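Prometheus is an open-source monitoring system that works by scraping (pulling) time series data exposed by applications. The data is usually exposed as an HTTP endpoint, either by the application itself through a client library or by a proxy called an exporter. Many exporters and client libraries already exist, covering a wide range of programming languages, frameworks, and open-source applications.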
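To make the "HTTP endpoint" concrete: what an exporter serves is plain text in the Prometheus text exposition format, one `name{labels} value` line per series. The sketch below uses only the Python standard library. The metric `demo_requests_total`, its `method` label, and port 8000 are hypothetical, and a real exporter would normally use an official client library rather than hand-rolling the format.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def _escape(value):
    # Label values in the text format escape backslash, double quote and newline.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_metric(name, help_text, metric_type, samples):
    """Render one metric family; samples is a list of (labels_dict, value)."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        if labels:
            pairs = ",".join(f'{k}="{_escape(str(v))}"' for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves a fixed, hypothetical counter on /metrics."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metric(
            "demo_requests_total", "Requests handled (hypothetical example).", "counter",
            [({"method": "get"}, 42), ({"method": "post"}, 7)],
        ).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    # Blocks forever; Prometheus would then scrape http://<host>:<port>/metrics.
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

Calling `serve()` and adding `<host>:8000` as a static target would be enough for Prometheus to start collecting `demo_requests_total`.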

## Official Architecture Diagram

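- Prometheus scrapes metrics from hosts, processes, services, or applications through configured targets. A group of targets that play the same role is called a job; for example, a `kubernetes-nodes` job can be defined to scrape metrics from every host in the cluster.
- Service discovery (finding targets):
  - Static target lists
  - File-based discovery, where the files can be kept up to date automatically by configuration management tools
  - Automatic discovery, with support for AWS, Consul, DNS, Kubernetes, Marathon, and other service discovery mechanisms
- Prometheus stores the collected time series data locally, and can also be configured with `remote_write` to send it to other time series databases such as OpenTSDB, InfluxDB, or M3DB.
- Visualization: Prometheus has a built-in web UI and also integrates with Grafana, a powerful open-source dashboarding tool whose custom dashboards can cover almost any requirement.
- Aggregation and alerting: Prometheus can query and aggregate time series data, and recording rules can precompute frequently used queries and aggregations. Alerting rules can also be defined; when a rule's condition is met, an alert fires and is pushed to Alertmanager. Alertmanager supports highly available clustered deployment; it manages, consolidates, and dispatches alerts to different destinations, and can converge related alerts to reduce noise.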
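For the file-based discovery mentioned above, Prometheus watches files that contain a list of target groups, each with `targets` (`host:port` strings) and optional `labels`, and picks up changes without a restart. The Python sketch below shows one way a configuration management job might regenerate such a file, writing it atomically through a temporary file and `os.replace`. The file path, the port 9100 address, and the `env` label are hypothetical. On the Prometheus side it would be paired with a `file_sd_configs` entry whose `files` pattern (for example a hypothetical `targets/*.json`) matches the file; the `.tmp` suffix keeps the half-written temporary file out of such a pattern.

```python
import json
import os
import tempfile


def write_file_sd(path, groups):
    """Write target groups in the JSON format read by file_sd_configs.

    groups is a list of (targets, labels) pairs, e.g.
    (["192.168.1.51:9100"], {"env": "test"}).
    """
    payload = [{"targets": list(targets), "labels": dict(labels)}
               for targets, labels in groups]
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temp file in the same directory, then rename it over the
    # real file, so Prometheus never reads a half-written target list.
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(payload, f, indent=2)
        os.replace(tmp, path)
    except BaseException:
        if os.path.exists(tmp):
            os.unlink(tmp)
        raise
    return payload
```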

## Data Model

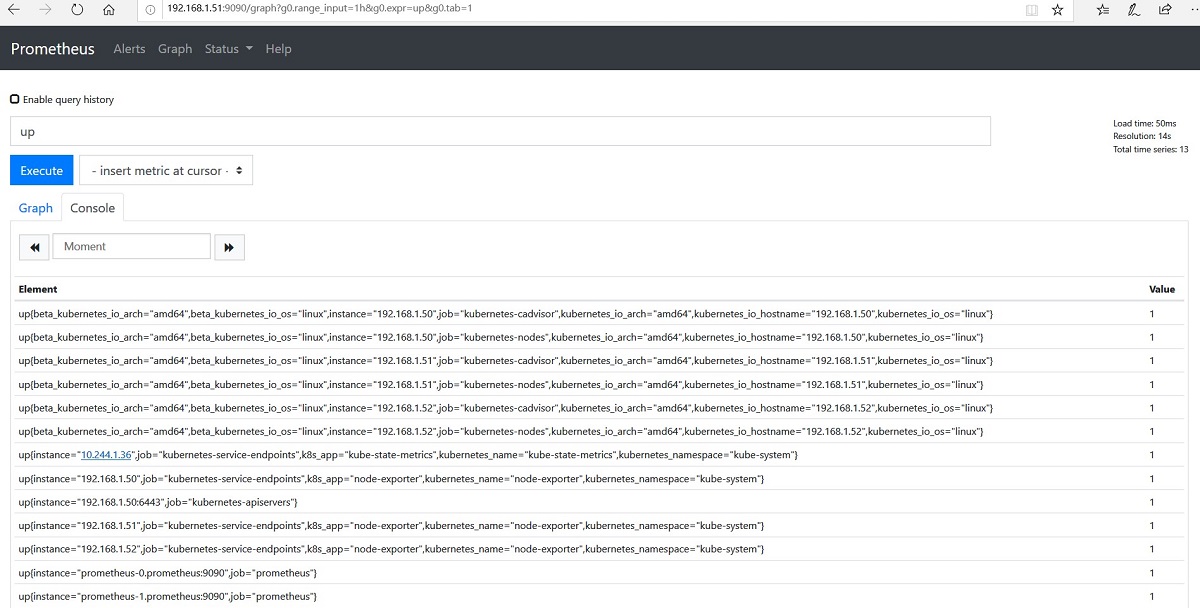

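Prometheus uses a multi-dimensional time series data model that combines a time series name with key/value pairs called labels, which provide the dimensions. Each time series is uniquely identified by the combination of its name and its labels.

```
<time series name>{<label name>=<label value>, ...}
```

For example:

```
machine_cpu_cores{beta_kubernetes_io_arch="amd64",beta_kubernetes_io_os="linux",instance="192.168.1.51",job="kubernetes-cadvisor",kubernetes_io_arch="amd64",kubernetes_io_hostname="192.168.1.51",kubernetes_io_os="linux"} 2
```

`machine_cpu_cores` is the number of CPU cores on a host. The target labels show that this sample was scraped by the `kubernetes-cadvisor` job from instance `192.168.1.51`; the series also carries the node's other labels. The value is 2.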
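The rule that a series is identified by its name plus its full label set can be illustrated with a small Python sketch that parses one sample line of the form above. It is deliberately partial (no timestamps or comment lines), and the function names are hypothetical.

```python
import re

# name{label="value",...} value   (timestamps and comment lines not handled)
_LINE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
# One label pair; the value may contain \\, \" and \n escapes.
_LABEL = re.compile(r'\s*([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"\s*(?:,|$)')


def _unescape(raw):
    return re.sub(r'\\(.)', lambda m: "\n" if m.group(1) == "n" else m.group(1), raw)


def parse_sample(line):
    """Split one sample line into (metric name, labels dict, value)."""
    m = _LINE.match(line.strip())
    if m is None:
        raise ValueError(f"not a sample line: {line!r}")
    name, body, value = m.group(1), m.group(2) or "", m.group(3)
    labels, pos = {}, 0
    while pos < len(body):
        lm = _LABEL.match(body, pos)
        if lm is None:
            raise ValueError(f"malformed labels: {body!r}")
        labels[lm.group(1)] = _unescape(lm.group(2))
        pos = lm.end()
    return name, labels, float(value)


def series_id(name, labels):
    """Metric name plus sorted label pairs: the key that identifies one series."""
    return (name, tuple(sorted(labels.items())))
```

Label order does not matter to `series_id`, but changing any single label value (say `instance`) yields a different series.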

## Cluster Topology Diagram

## Node Deployment Plan

| IP | K8s role | Services |
|---|---|---|
| 192.168.1.50 | master | node-exporter/alertmanager |
| 192.168.1.51 | node | node-exporter/alertmanager/grafana/prometheus-0/prometheus-federate-0/kube-state-metrics |
| 192.168.1.52 | node | node-exporter/alertmanager/prometheus-1/prometheus-federate-1 |

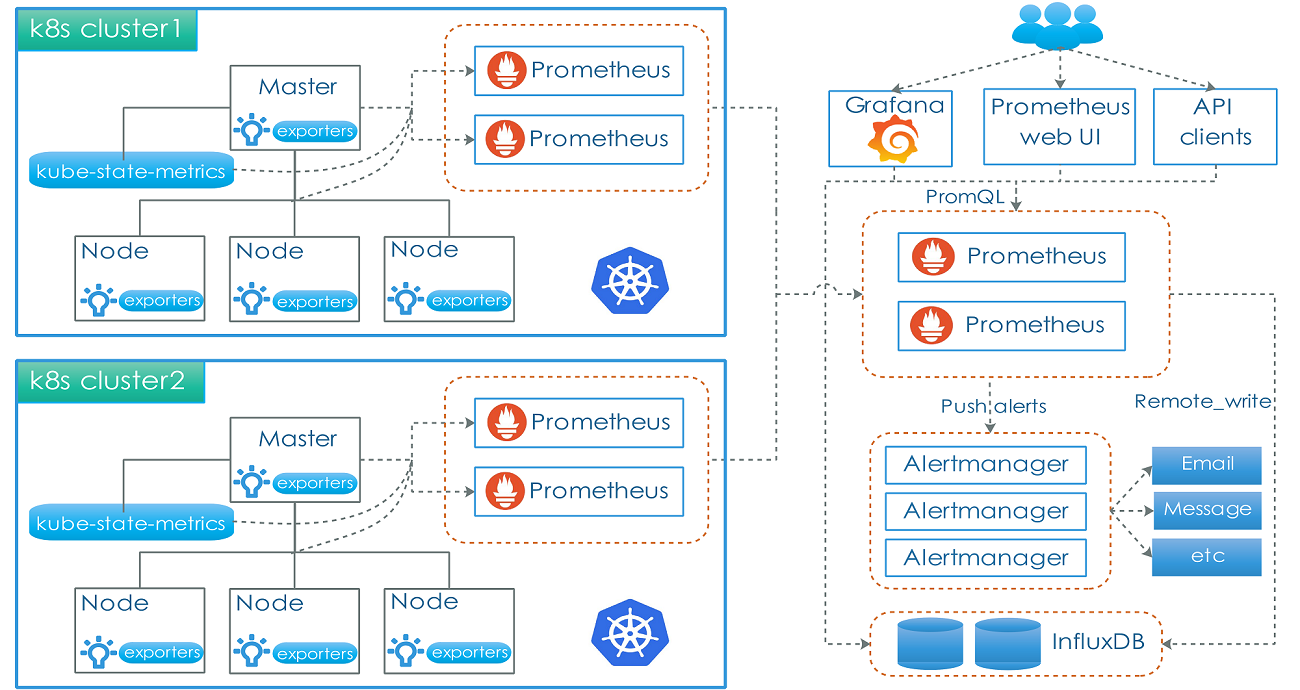

## Deployment Architecture Diagram

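In a multi-Kubernetes-cluster setup, each cluster runs its own Prometheus server to collect that cluster's metrics, and Prometheus federation is used to gather the monitoring data in one place for unified display and alert notification.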
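In federation, the upper-level Prometheus scrapes each cluster Prometheus's `/federate` endpoint, passing one or more `match[]` series selectors; in its scrape config this is usually a job with `metrics_path: /federate`, `honor_labels: true`, and the selectors under `params`. The Python sketch below only builds the resulting URL to show what gets requested. The address (a node from the plan above) and the selectors are assumptions.

```python
from urllib.parse import urlencode


def federate_url(base_url, selectors):
    """Build the /federate URL an upper-level Prometheus would scrape.

    Each selector becomes its own match[] parameter; series matching any
    of them are returned.
    """
    query = urlencode([("match[]", s) for s in selectors])
    return f"{base_url.rstrip('/')}/federate?{query}"
```

For example, `federate_url("http://192.168.1.51:9090", ['{job="kubernetes-cadvisor"}'])` produces a URL whose single `match[]` parameter selects only the cAdvisor series from that cluster.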

## Deploying the Prometheus Server Inside the Cluster

The Prometheus server is deployed as a StatefulSet backed by local persistent volumes (Local Volume).

### Create the Prometheus server data directory

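On each node that hosts one of the local PVs (192.168.1.51 and 192.168.1.52), create the data directory and hand it over to UID/GID 65534, the `nobody` user that the `prom/prometheus` image runs as. If an account with ID 65534 already exists on the node, skip `groupadd`/`useradd` and use that account's name in `chown`.

```console
$ mkdir -p /data/prometheus
$ groupadd -g 65534 nfsnobody
$ useradd -g 65534 -u 65534 -s /sbin/nologin nfsnobody
$ chown nfsnobody:nfsnobody /data/prometheus/
```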

### Create a StorageClass with topology-aware binding (volumes are bound only after the Pod is scheduled)

`storageclass.yaml`:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: prometheus-lpv
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
```

### Create two local volumes pinned to specific nodes with nodeAffinity

`pv.yaml`:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-lpv-0
spec:
  capacity:
    storage: 50Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: prometheus-lpv
  local:
    path: /data/prometheus
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - 192.168.1.51
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-lpv-1
spec:
  capacity:
    storage: 50Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: prometheus-lpv
  local:
    path: /data/prometheus
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - 192.168.1.52
```
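The `nodeAffinity` block is what pins each local PV to one node: the scheduler takes the PV's node affinity into account when placing a Pod that uses it. The Python sketch below is a simplified model of the `nodeSelectorTerms` matching rules (terms are ORed, expressions within a term are ANDed) for the `In`, `NotIn`, `Exists`, and `DoesNotExist` operators only; it is an illustration, not the scheduler's code, and the function names are hypothetical.

```python
def matches_expression(node_labels, expr):
    """Evaluate one matchExpressions entry against a node's labels."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return key in node_labels and node_labels[key] in values
    if op == "NotIn":
        # A missing label also satisfies NotIn.
        return node_labels.get(key) not in values
    if op == "Exists":
        return key in node_labels
    if op == "DoesNotExist":
        return key not in node_labels
    raise ValueError(f"operator not covered by this sketch: {op}")


def pv_usable_on(node_labels, node_selector_terms):
    """Terms are ORed; expressions inside one term are ANDed."""
    return any(
        all(matches_expression(node_labels, e) for e in term["matchExpressions"])
        for term in node_selector_terms
    )
```

With the terms of `prometheus-lpv-0`, only a node labeled `kubernetes.io/hostname=192.168.1.51` qualifies.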

### Initialize RBAC

`rbac-setup.yaml`:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: kube-system
```

### Create the ConfigMap (prometheus.yml)

`prometheus-configmap.yaml`:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 30s
      evaluation_interval: 30s
      external_labels:
        cluster: "01"

    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets:
        - prometheus-0.prometheus:9090
        - prometheus-1.prometheus:9090

    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https

    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics

    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
      metric_relabel_configs:
      - action: replace
        source_labels: [id]
        regex: '^/machine\.slice/machine-rkt\\x2d([^\\]+)\\.+/([^/]+)\.service$'
        target_label: rkt_container_name
        replacement: '${2}-${1}'
      - action: replace
        source_labels: [id]
        regex: '^/system\.slice/(.+)\.service$'
        target_label: systemd_service_name
        replacement: '${1}'

    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name

    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
      - source_labels: [__address__]
        action: replace
        target_label: instance
        regex: (.+):(.+)
        replacement: $1
```
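The `__address__` rewrite in the `kubernetes-pods` and `kubernetes-service-endpoints` jobs is the least obvious part of this config: the source label values are joined with `;`, the regex must match the whole joined string, and the capture groups are substituted into `replacement`. The Python sketch below models only the `replace` action under those rules, with Prometheus' defaults of `regex: (.*)` and `replacement: $1`, numeric `$N`/`${N}` references only, and no templated `target_label`. It is a simplified illustration rather than Prometheus' implementation; the pod IP and ports in the example call are made up.

```python
import re

# $N or ${N} references in a replacement string (numeric groups only).
_REF = re.compile(r"\$\{(\d+)\}|\$(\d+)")


def relabel_replace(labels, source_labels, target_label,
                    regex="(.*)", replacement="$1", separator=";"):
    """Simplified model of a relabel rule with `action: replace`."""
    # 1. Join the source label values (missing labels count as "").
    value = separator.join(labels.get(name, "") for name in source_labels)
    # 2. The regex must match the whole joined string.
    m = re.fullmatch(regex, value)
    if m is None:
        return dict(labels)  # no match: nothing changes

    # 3. Substitute capture groups into the replacement.
    def group(ref):
        idx = int(ref.group(1) or ref.group(2))
        return (m.group(idx) or "") if idx <= m.re.groups else ""

    result = dict(labels)
    new_value = _REF.sub(group, replacement)
    if new_value:
        result[target_label] = new_value
    else:
        result.pop(target_label, None)  # an empty result removes the label
    return result


if __name__ == "__main__":
    # Hypothetical pod address and prometheus.io/port annotation value.
    print(relabel_replace(
        {"__address__": "10.244.1.5:8080",
         "__meta_kubernetes_pod_annotation_prometheus_io_port": "9102"},
        ["__address__", "__meta_kubernetes_pod_annotation_prometheus_io_port"],
        "__address__", regex=r"([^:]+)(?::\d+)?;(\d+)", replacement="$1:$2",
    )["__address__"])  # 10.244.1.5:9102
```

If a pod has no `prometheus.io/port` annotation, the joined value ends in `;` with no digits, the regex does not match, and `__address__` keeps the discovered port.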

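Once the StatefulSet is created, its Pods are named `<statefulset name>-0`, `<statefulset name>-1`, and so on, and each Pod gets a stable DNS name of the form `<pod name>.<service name>.<namespace>.svc.cluster.local`, where `<service name>` is the headless Service referenced by the StatefulSet's `serviceName` (here both names are `prometheus`).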
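The naming rule can be spelled out as two small Python helpers with hypothetical names. `cluster.local` is the default cluster domain and is an assumption here; the short names returned by the second helper are the form used in the `prometheus` job and only resolve inside the cluster, through the Pod's DNS search path.

```python
def statefulset_pod_dns(statefulset, service, namespace, replicas,
                        cluster_domain="cluster.local"):
    """Stable per-Pod DNS names provided by the governing headless Service."""
    return [
        f"{statefulset}-{ordinal}.{service}.{namespace}.svc.{cluster_domain}"
        for ordinal in range(replicas)
    ]


def prometheus_job_targets(statefulset, service, replicas, port=9090):
    """Short <pod>.<service>:<port> names, as listed in the 'prometheus' job."""
    return [f"{statefulset}-{ordinal}.{service}:{port}" for ordinal in range(replicas)]
```

For this deployment, `statefulset_pod_dns("prometheus", "prometheus", "kube-system", 2)` yields the fully qualified names of `prometheus-0` and `prometheus-1` in `kube-system`.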

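The `prometheus` job collects Prometheus's own metrics. Because the Prometheus servers in this example run as a StatefulSet, its targets are `prometheus-0.prometheus:9090` and `prometheus-1.prometheus:9090`; these short names resolve through the `kube-system.svc.cluster.local` DNS search domain that the Pods get from `dnsPolicy: ClusterFirstWithHostNet`.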

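`external_labels` are labels attached to time series and alerts whenever Prometheus communicates with external systems. When metrics are sent via `remote_write` or pulled via federation, they mark which cluster the data came from (here `cluster: "01"`).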
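How the `cluster: "01"` label reaches the external side can be modeled in a few lines: external labels are added to each outgoing series unless the series already carries a label of the same name, and in Prometheus an empty label value counts as absent. This Python sketch is a simplified model of that rule rather than Prometheus code; the function name is hypothetical.

```python
def attach_external_labels(series_labels, external_labels):
    """Labels of a series as seen by an external system."""
    # Empty values are equivalent to a missing label in Prometheus.
    merged = {k: v for k, v in series_labels.items() if v != ""}
    for name, value in external_labels.items():
        # A label already present on the series wins over the external one.
        merged.setdefault(name, value)
    return merged
```

So `{"__name__": "up", "job": "prometheus"}` leaves this cluster as `up{cluster="01",job="prometheus"}`, which lets the upper-level Prometheus tell clusters apart.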

### Create the headless Service

`service-statefulset.yaml`:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: kube-system
spec:
  ports:
  - name: prometheus
    port: 9090
    targetPort: 9090
  selector:
    k8s-app: prometheus
  clusterIP: None
```

### Create the Prometheus StatefulSet

`prometheus-statefulset.yaml`:

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    k8s-app: prometheus
    kubernetes.io/cluster-service: "true"
spec:
  serviceName: "prometheus"
  podManagementPolicy: "Parallel"
  replicas: 2
  selector:
    matchLabels:
      k8s-app: prometheus
  template:
    metadata:
      labels:
        k8s-app: prometheus
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: k8s-app
                operator: In
                values:
                - prometheus
            topologyKey: "kubernetes.io/hostname"
      priorityClassName: system-cluster-critical
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: prometheus-server-configmap-reload
        image: "jimmidyson/configmap-reload:v0.1"
        imagePullPolicy: "IfNotPresent"
        args:
        - --volume-dir=/etc/config
        - --webhook-url=http://localhost:9090/-/reload
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
          readOnly: true
        resources:
          limits:
            cpu: 10m
            memory: 10Mi
          requests:
            cpu: 10m
            memory: 10Mi
      - image: prom/prometheus:v2.11.0
        imagePullPolicy: IfNotPresent
        name: prometheus
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        # --storage.tsdb.retention is deprecated since v2.7 in favor of retention.time
        - "--storage.tsdb.retention.time=24h"
        - "--web.console.libraries=/etc/prometheus/console_libraries"
        - "--web.console.templates=/etc/prometheus/consoles"
        - "--web.enable-lifecycle"
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: "/prometheus"
          name: prometheus-data
        - mountPath: "/etc/prometheus"
          name: config-volume
        readinessProbe:
          httpGet:
            path: /-/ready
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
        livenessProbe:
          httpGet:
            path: /-/healthy
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 1000m
            memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
      - name: config-volume
        configMap:
          name: prometheus-config
  volumeClaimTemplates:
  - metadata:
      name: prometheus-data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "prometheus-lpv"
      resources:
        requests:
          storage: 20Gi
```
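The `prometheus-server-configmap-reload` sidecar watches the mounted ConfigMap and, when it changes, calls the webhook `http://localhost:9090/-/reload`. That endpoint is only enabled because Prometheus runs with `--web.enable-lifecycle`, and `localhost` reaches Prometheus because both containers share the Pod's network namespace (here the host network). The same reload can be triggered by hand; the Python sketch below builds that POST request. The helper names are hypothetical, and the default base URL assumes you run it on the Prometheus node itself.

```python
from urllib.request import Request, urlopen


def reload_request(base_url="http://localhost:9090"):
    """POST /-/reload; only enabled when Prometheus runs with --web.enable-lifecycle."""
    return Request(base_url.rstrip("/") + "/-/reload", data=b"", method="POST")


def reload_prometheus(base_url="http://localhost:9090", timeout=10):
    """Ask Prometheus to re-read prometheus.yml and return the HTTP status.

    urlopen raises urllib.error.HTTPError on a non-2xx answer, e.g. when the
    new configuration fails to load.
    """
    with urlopen(reload_request(base_url), timeout=timeout) as resp:
        return resp.status
```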

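`volumeClaimTemplates` references the StorageClass, so when each Pod is created a PVC is generated for it and bound to one of the `prometheus-lpv` PVs. As long as the PVC is not deleted, the Pod will always be scheduled onto the node where its bound PV lives.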

```console
$ kubectl get pv
prometheus-lpv-0 50Gi RWO Retain Bound kube-system/prometheus-data-prometheus-1 prometheus-lpv 13d
prometheus-lpv-1 50Gi RWO Retain Bound kube-system/prometheus-data-prometheus-0 prometheus-lpv 13d
$ kubectl get pvc -n kube-system
prometheus-data-prometheus-0 Bound prometheus-lpv-1 50Gi RWO prometheus-lpv 13d
prometheus-data-prometheus-1 Bound prometheus-lpv-0 50Gi RWO prometheus-lpv 13d
```
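The PVC names in this output follow the StatefulSet convention `<volumeClaimTemplate name>-<StatefulSet name>-<ordinal>`. Which PV each claim gets is decided only when its Pod is scheduled (`WaitForFirstConsumer`), so the PV index need not match the Pod ordinal: here `prometheus-0` ended up on `prometheus-lpv-1`, that is, on 192.168.1.52 according to that PV's nodeAffinity. The Python helpers below, with hypothetical names, spell out the naming rule and read the Pod-to-PV mapping back from `kubectl get pvc` rows.

```python
def pvc_name(claim_template, statefulset, ordinal):
    """Name of the PVC a StatefulSet creates for one Pod:
    <volumeClaimTemplate name>-<StatefulSet name>-<ordinal>."""
    return f"{claim_template}-{statefulset}-{ordinal}"


def pod_to_pv(pvc_rows, claim_template, statefulset):
    """Map Pod name -> bound PV from `kubectl get pvc` rows
    (columns NAME STATUS VOLUME ...; header row excluded)."""
    prefix = f"{claim_template}-{statefulset}-"
    mapping = {}
    for row in pvc_rows:
        cols = row.split()
        if len(cols) >= 3 and cols[0].startswith(prefix) and cols[1] == "Bound":
            pod = cols[0][len(claim_template) + 1:]  # strip "<template>-"
            mapping[pod] = cols[2]
    return mapping
```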

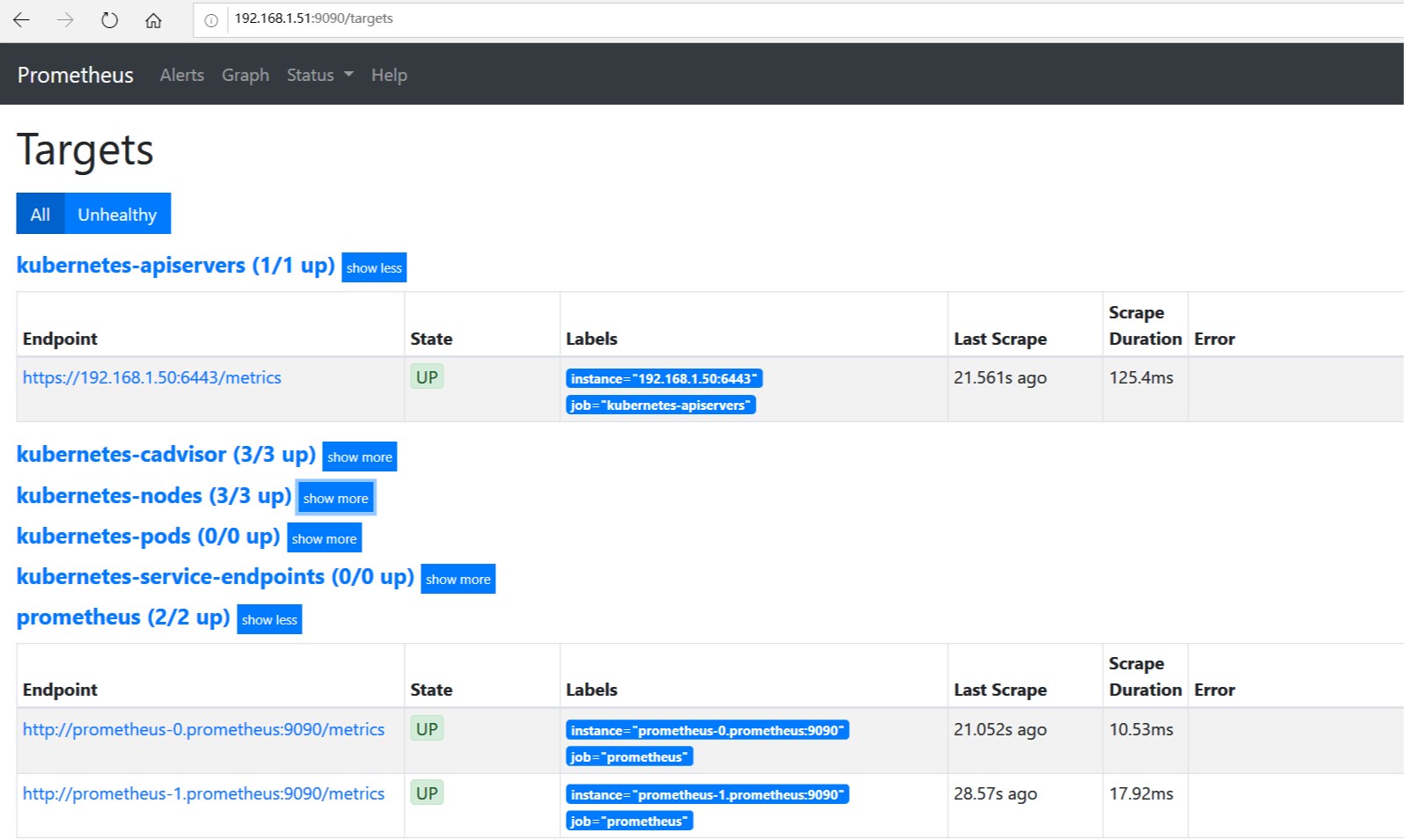

### Access the Prometheus web UI

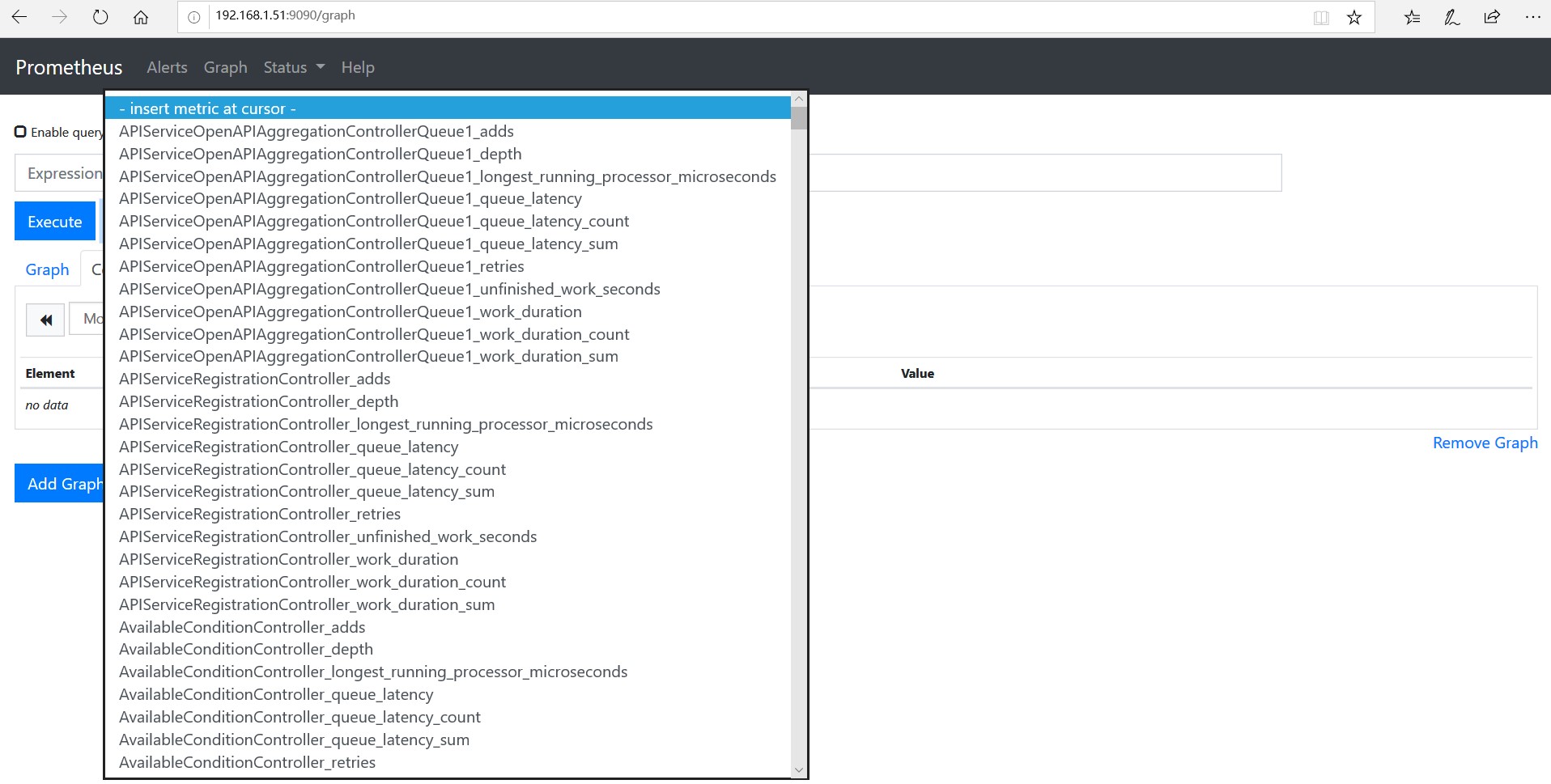

The targets of every job defined in prometheus.yml are now being scraped, and metrics can be queried on the Graph page.
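Anything shown on the Graph page can also be fetched from Prometheus' HTTP API. The Python sketch below uses the standard library against the instant-query endpoint `/api/v1/query`. The base URL (a node from the plan above, reachable on port 9090 because of `hostNetwork: true`) is an assumption, and keying results by the `instance` label alone is a simplification: if several series share an instance, the last one wins.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def instant_query_url(base_url, promql):
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector(body):
    """Turn an /api/v1/query response of resultType "vector" into
    {instance: value}."""
    doc = json.loads(body)
    if doc.get("status") != "success":
        raise RuntimeError(doc.get("error", "query failed"))
    data = doc["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector, got {data['resultType']}")
    # Each sample's value is [unix_timestamp, "value as a string"].
    return {r["metric"].get("instance", ""): float(r["value"][1]) for r in data["result"]}


def query(base_url, promql, timeout=10):
    with urlopen(instant_query_url(base_url, promql), timeout=timeout) as resp:
        return parse_vector(resp.read())
```

For example, `query("http://192.168.1.51:9090", "machine_cpu_cores")` would return the CPU core count per node, matching the sample shown in the data model section.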

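With the current configuration, metrics for Prometheus itself, the API server, and containers are all being collected, but host metrics and Kubernetes resource-object metrics are still missing; node-exporter and kube-state-metrics still need to be deployed for those.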