- 博客/

一次请求超时问题排查记录

背景#

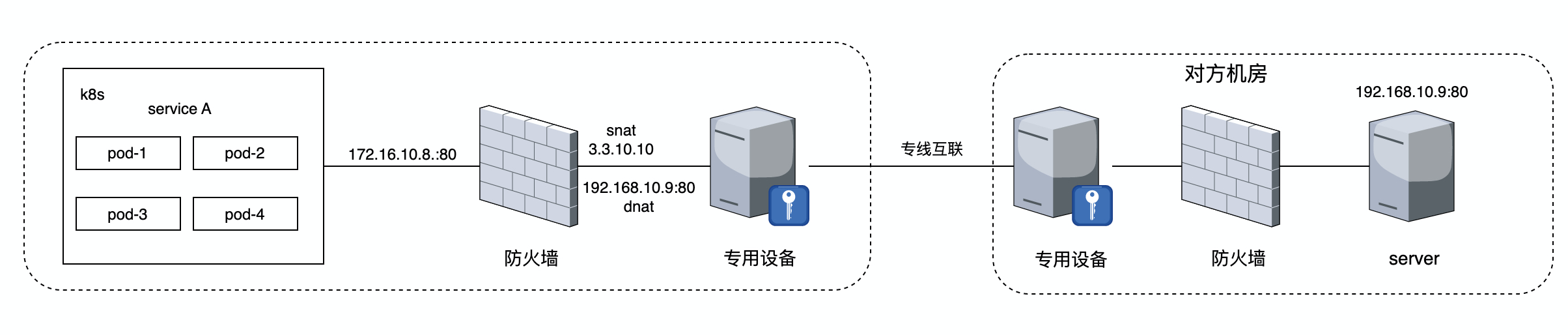

服务pod实例访问对方接口频繁超时,引起客诉。我们通过专线与对方机房互联,并且专线接入侧部署有专用物理设备(vpn加密通道),架构图如下

问题分析#

客户端请求抓包#

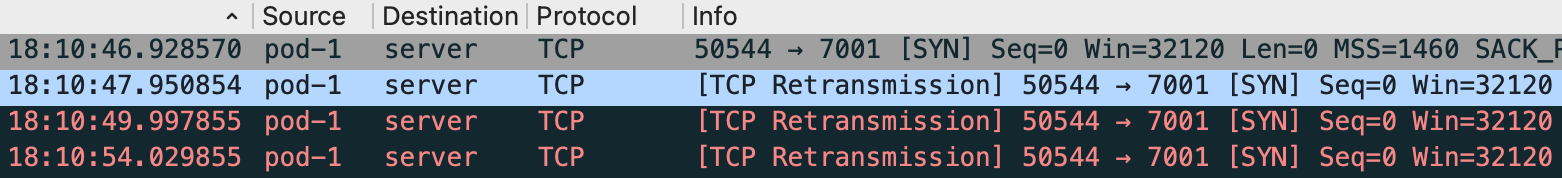

对服务pod实例抓包分析,发现超时的http请求均为发送syn包无响应导致tcp连接建立失败超时。同时专线网络监控比较稳,可以排除专线网络丢包的原因

对方服务器抓包#

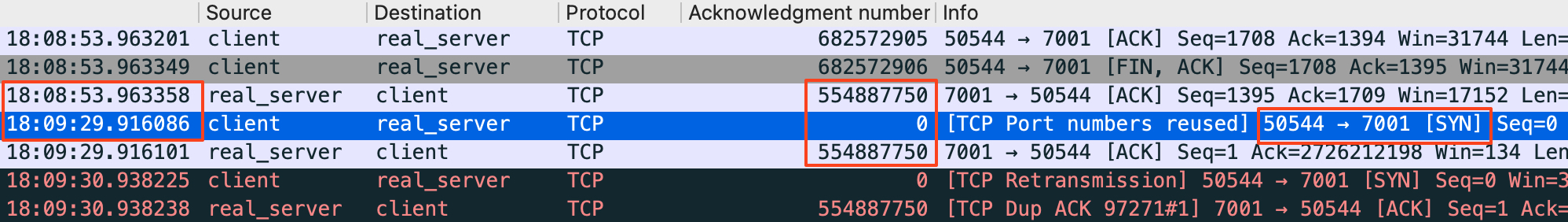

联系对方协助在server机器上抓包,分析对方提供的抓包文件:

- 客户端请求经过防火墙SNAT后,对方server看到的客户端IP都一样

- 服务器上收到了SYN包,但是回复的是ACK包,而不是SYN+ACK包

- 超时重传都发生在客户端请求源端口复用时(十几秒~九十几秒)

- client复用端口时,对比server发送的ACK包和相同五元组TCP连接断开时发送的最后一个ACK包,Acknowledgment number是相同的

- HTTP response header中包含

Connection: close,server只支持http1.0短连接,客户端请求完成后server主动断开TCP连接并进入TIME-WAIT状态

根据以上分析推断,对于新请求server端复用了之前的TCP连接,TCP连接处于TIME-WAIT状态

还有一个问题,server回复了ACK包,客户端为什么没有收到? 通过在专用设备上抓包分析,发现收到了ACK回包,推测是被防火墙drop。因为防火墙TCP连接TIME-WAIT状态timeout时间为1s,对于客户端新请求SYN包,会新建tcp session,收到server ACK回包后,认为非法就drop了

解决方案#

支持HTTP1.1长连接#

接口支持http1.1长连接,客户端请求完成后会主动断开TCP连接,TIME-WAIT状态维持在客户端。对方反馈无法支持

增加防火墙SNAT地址池#

当前业务的防火墙策略的SNAT IP Pool只有一个3.3.10.10,尝试再增加一个3.3.10.11,发现防火墙并没有轮询使用。查看官方文档,由于当前在用的IP Pool类型为overload,会根据客户端IP分配snat地址。比起巧合的是当前客户端四个pod ip 计算出来都会使用3.3.10.10。所以客户端pod IP随机分配,最终使用snat地址也是不确定的,考虑把SNAT IP Pool切换为 one-to-one类型

one-to-one模式是: 内部 IP 地址和外部(转换后的)IP 地址一对一匹配

配置一个28位的新地址池之后,超时请求率大幅降低。但是one-to-one IP pool并不是静态SNAT,所以每次客户端请求结束后,会释放snat IP到池子里,还是有一定几率超时。

如果要彻底解决,需要物理机或虚机部署固定IP,然后配置防火墙配置静态SNAT

内核源码看TIME_WAIT连接处理#

为什么会返回ACK? 从内核源码中找答案,内核版本3.10.0-1062

tcp_v4_rcv()#

tcp_v4_rcv函数负责接收和处理传入的TCP ipv4 数据包

int tcp_v4_rcv(struct sk_buff *skb)

{

const struct iphdr *iph;

const struct tcphdr *th;

struct sock *sk;

/* 中间省略 */

process:

// TCP连接处于TIMT_WAIT状态时,跳转到do_time_wait

if (sk->sk_state == TCP_TIME_WAIT)

goto do_time_wait;

/* 中间省略 */

do_time_wait:

/* 中间省略 */

// 根据tcp_timewait_state_process函数返回值,确定下一步动作

switch (tcp_timewait_state_process(inet_twsk(sk), skb, th)) {

// 合法SYN包,可以复用TCP连接,tcp状态可以切换至SYN_RECV

case TCP_TW_SYN: {

struct sock *sk2 = inet_lookup_listener(dev_net(skb->dev),

&tcp_hashinfo,

iph->saddr, th->source,

iph->daddr, th->dest,

inet_iif(skb));

if (sk2) {

inet_twsk_deschedule(inet_twsk(sk), &tcp_death_row);

inet_twsk_put(inet_twsk(sk));

sk = sk2;

goto process;

}

/* Fall through to ACK */

}

/* 发送ACK包

1.TCP_FIN_WAIT2状态收到FIN包,发送最后一次挥手的ACK包

2.TCP_TIME_WAIT状态下发送上一次的ACK包

*/

case TCP_TW_ACK:

tcp_v4_timewait_ack(sk, skb);

break;

// 发送RESET包

case TCP_TW_RST:

tcp_v4_send_reset(sk, skb);

inet_twsk_deschedule(inet_twsk(sk), &tcp_death_row);

inet_twsk_put(inet_twsk(sk));

goto discard_it;

// 直接丢弃,不做处理

case TCP_TW_SUCCESS:;

}

goto discard_it;

}

结合上面的场景,server处理流程应该是: 收到SYN包,tcp状态为TCP_TIME_WAIT,tcp_timewait_state_process()函数处理并返回了TCP_TW_ACK

tcp_timewait_state_process()#

tcp_timewait_state_process() 函数处理

tcp_timewait_state_process(struct inet_timewait_sock *tw, struct sk_buff *skb,

const struct tcphdr *th)

{

struct tcp_options_received tmp_opt;

struct tcp_timewait_sock *tcptw = tcp_twsk((struct sock *)tw);

bool paws_reject = false;

tmp_opt.saw_tstamp = 0;

// tcp header中开启了options且当前tcp连接开启了时间戳选项

if (th->doff > (sizeof(*th) >> 2) && tcptw->tw_ts_recent_stamp) {

tcp_parse_options(skb, &tmp_opt, 0, NULL);

if (tmp_opt.saw_tstamp) {

if (tmp_opt.rcv_tsecr)

tmp_opt.rcv_tsecr -= tcptw->tw_ts_offset;

tmp_opt.ts_recent = tcptw->tw_ts_recent;

tmp_opt.ts_recent_stamp = tcptw->tw_ts_recent_stamp;

/* 如果开启了TCP timestamp可选项,判断是否发生时间戳回绕

1. paws_reject = false 时间戳没有回绕

2. paws_reject = true 时间戳回绕 */

paws_reject = tcp_paws_reject(&tmp_opt, th->rst);

}

}

// tcp状态是FIN_WAIT2的处理逻辑

if (tw->tw_substate == TCP_FIN_WAIT2) {

/* 中间省略 */

}

// 时间戳没有回绕

if (!paws_reject &&

// 报文序列号 等于 预期接收的序列号

(TCP_SKB_CB(skb)->seq == tcptw->tw_rcv_nxt &&

/* TCP_SKB_CB(skb)->end_seq = (TCP_SKB_CB(skb)->seq + th->syn + th->fin +

skb->len - th->doff * 4);

没有携带数据报文,只有tcp header且没有syn/fin标志位,只能是reset或者ack

*/

(TCP_SKB_CB(skb)->seq == TCP_SKB_CB(skb)->end_seq || th->rst))) {

/* In window segment, it may be only reset or bare ack. */

if (th->rst) {

// net.ipv4.tcp_rfc1337内核参数等于0时

if (sysctl_tcp_rfc1337 == 0) {

kill:

//清理TIME_WAIT连接

inet_twsk_deschedule(tw, &tcp_death_row);

inet_twsk_put(tw);

return TCP_TW_SUCCESS;

}

} else {

// 重置TIME_WAIT状态timeout时间

inet_twsk_schedule(tw, &tcp_death_row, TCP_TIMEWAIT_LEN,

TCP_TIMEWAIT_LEN);

}

if (tmp_opt.saw_tstamp) {

tcptw->tw_ts_recent = tmp_opt.rcv_tsval;

tcptw->tw_ts_recent_stamp = get_seconds();

}

inet_twsk_put(tw);

return TCP_TW_SUCCESS;

}

// SYN包且没有rst/ack标志位,时间戳没有回绕

if (th->syn && !th->rst && !th->ack && !paws_reject &&

// 序列号没有回绕 或者

(after(TCP_SKB_CB(skb)->seq, tcptw->tw_rcv_nxt) ||

// 请求报文中开启timestamp可选项并且时间戳没有回绕

(tmp_opt.saw_tstamp &&

(s32)(tcptw->tw_ts_recent - tmp_opt.rcv_tsval) < 0))) {

u32 isn = tcptw->tw_snd_nxt + 65535 + 2;

if (isn == 0)

isn++;

TCP_SKB_CB(skb)->tcp_tw_isn = isn;

return TCP_TW_SYN;

}

if (paws_reject)

// 更新 /proc/net/netstat

NET_INC_STATS_BH(twsk_net(tw), LINUX_MIB_PAWSESTABREJECTED);

if (!th->rst) {

/* In this case we must reset the TIMEWAIT timer.

*

* If it is ACKless SYN it may be both old duplicate

* and new good SYN with random sequence number <rcv_nxt.

* Do not reschedule in the last case.

*/

if (paws_reject || th->ack)

// 重置TIME_WAIT状态timeout时间

inet_twsk_schedule(tw, &tcp_death_row, TCP_TIMEWAIT_LEN,

TCP_TIMEWAIT_LEN);

/*

if (*last_oow_ack_time) {

s32 elapsed = (s32)(tcp_time_stamp - *last_oow_ack_time);

// 计算当前的tcp timestamp(jiffies)和上次发送ack时的tcp timestamp 差值

// 如果小于内核参数net.ipv4.tcp_invalid_ratelimit 表示速率过快,需要限速

if (0 <= elapsed && elapsed < sysctl_tcp_invalid_ratelimit) {

// 更新 /proc/net/netstat

NET_INC_STATS(net, mib_idx);

return true;

}

}

如果dupacks没有限速 return TCP_TW_ACK,否则return TCP_TW_SUCCESS

*/

return tcp_timewait_check_oow_rate_limit(

tw, skb, LINUX_MIB_TCPACKSKIPPEDTIMEWAIT);

}

inet_twsk_put(tw);

return TCP_TW_SUCCESS;

}

th->doff 数据偏移字段,4-bit的无符号整型(数据范围0~15) 表示tcp header的长度,单位是32-bit(即4 bytes),范围5~15 tcp header(no options)长度为5,大小 5 * 32-bit (4 bytes) = 20 bytes

sizeof(*th) » 2 tcphdr结构体大小是20 bytes,20 » 2 = 20 / 4 = 5

时间戳回绕判断#

static inline bool tcp_paws_reject(const struct tcp_options_received *rx_opt,

int rst)

{

// tcp_paws_check()函数判断是否通过检查

if (tcp_paws_check(rx_opt, 0))

return false;

if (rst && get_seconds() >= rx_opt->ts_recent_stamp + TCP_PAWS_MSL)

return false;

return true;

}

static inline bool tcp_paws_check(const struct tcp_options_received *rx_opt,

int paws_win)

{

if ((s32)(rx_opt->ts_recent - rx_opt->rcv_tsval) <= paws_win)

return true;

if (unlikely(get_seconds() >= rx_opt->ts_recent_stamp + TCP_PAWS_24DAYS))

return true;

if (!rx_opt->ts_recent)

return true;

return false;

}

(s32)(rx_opt->ts_recent - rx_opt->rcv_tsval) <= 0 当前tcp连接server上一次发送的tcp报文中的TSecr和tcp报文选中的TSval的差值小于等于0,检查通过

- tcp timestamp是u32无符号整型,数据范围0~2^32-1

- s32是有符合整型,数据范围是-2^31~2^31-1

- u32无符号整型减法,如果|x|>y,则y-x = 2^32-|x-y|

- 对u32差值做强制类型转换的话,如果超过2^31就会溢出。所以rcv_tsval - ts_recent > 2^31时,时间戳回绕检查不会通过

tcp timestamp取值来源于jiffies,和服务器运行时长有关,记录的是从开机到现在时间戳时钟更新了多少次,rfc规定更新频率是1ms~1s,服务器重启后jiffies重置

Centos中查看内核时间戳时钟频率,1s/1000=1ms,那么2^31/1000/(3600*24)=24.8天后回绕

$ cat /boot/config-3.10.0-1062.el7.x86_64 |grep -w "CONFIG_HZ" CONFIG_HZ=1000get_seconds() >= rx_opt->ts_recent_stamp + TCP_PAWS_24DAYS

#define TCP_PAWS_24DAYS (60 * 60 * 24 * 24) ,单位是s

当前系统时间戳在tcp连接上一次收到rcv_tsval时的系统时间戳的24天之后的话,则时间戳回绕检查通过

时钟频率是1ms,那么rcv_tsval增加到溢出的时间是2^31/1000/(3600*24)=24.8天

所以增加了一个超过TCP_PAWS_24DAYS的判断,直接检查通过

序列号回绕判断#

对于SYN包,会比较tcp报文的序列号是否大于tcp连接记录的要预期接收的序列号

after(TCP_SKB_CB(skb)->seq, tcptw->tw_rcv_nxt)

// after函数定义

static inline bool before(__u32 seq1, __u32 seq2)

{

return (__s32)(seq1-seq2) < 0;

}

#define after(seq2, seq1) before(seq1, seq2)

(__s32)(seq1-seq2) 也是对u32差值强制类型转换,同样存在溢出情况。所以如果SYN包的seq比tcptw->tw_rcv_nxt大超过2^31,则认为序列号回绕,不能复用TIME_WAIT连接

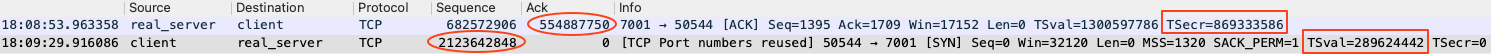

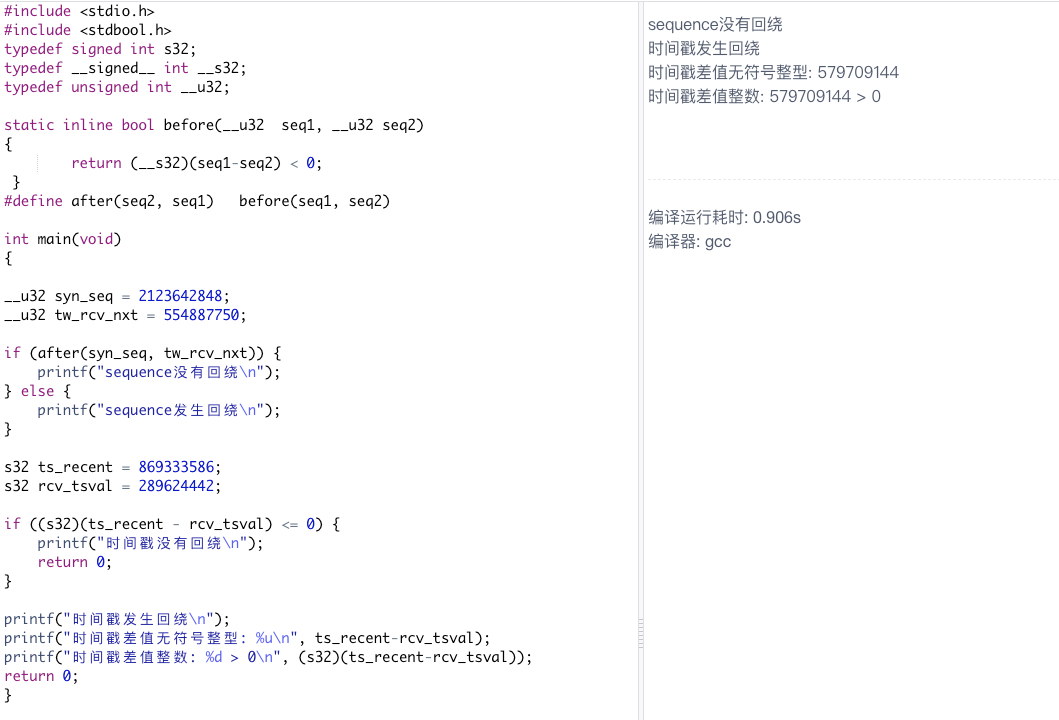

结合上文抓包分析,

seq=2123642848, ack=554887750

ts_recent = 869333586,rcv_tsval = 28962444

代码运行结果显示,时间戳发生回绕,所以paws_reject = true,返回了TCP_TW_ACK

问题场景模拟#

测试环境搭建#

服务器都是基于同一物理机上kvm虚拟化部署,内核版本 3.10.0-1062.el7.x86_64

client 请求 http://172.16.10.8:80 ,路由转发到firewall,filrewall服务器上使用iptables配置snat和dnat,再路由转发到gateway,gateway再转发到server

| role | device | ip | special route | system |

|---|---|---|---|---|

| client-1 | eth0 | 172.16.9.131 | 172.16.10.8 via 172.16.9.133 dev eth0 | centos 7.7 |

| client-2 | eth0 | 172.16.9.132 | 172.16.10.8 via 172.16.9.133 dev eth0 | centos 7.7 |

| firewall | eth0 | 172.16.9.133 | centos 7.7 | |

| eth1 | 192.168.102.3 | 192.168.10.9 via 192.168.102.2 dev eth1 | ||

| physical gw (专用设备) | eth0 | 192.168.102.2 | 3.3.10.10 via 192.168.102.3 dev eth0 | centos 7.7 |

| eth1 | 192.168.10.8 | |||

| server | eth0 | 192.168.10.9 | 3.3.10.10 via 192.168.10.8 dev eth0 | centos 7.7 |

firewall配置#

iptables配置snat和dnat

#dnat 收到访问172.16.10.8:80请求路由转发到192.168.10.9:80

$ iptables -t nat -A PREROUTING -d 172.16.10.8/32 -i eth0 -p tcp -m tcp --dport 80 -j DNAT --to-destination 192.168.10.9:80

#snat 访问192.168.10.9:80的请求,源地址转换为3.3.10.10

$ iptables -t nat -A POSTROUTING -d 192.168.10.9/32 -o eth1 -p tcp -m tcp --dport 80 -j SNAT --to-source 3.3.10.10

内核参数

$ sysctl -w net.ipv4.ip_forward=1

# 参考物理防火墙配置

$ systcl -w net.netfilter.nf_conntrack_tcp_timeout_time_wait=2

gateway配置#

专用设备是用于双方建立vpn加密通道,基于iptables对请求打标记、转发到vpn网卡。测试环境只加了conntrack记录

$ iptables -t nat -A PREROUTING -m conntrack --ctstate INVALID -j LOG --log-prefix "Conntrack INVALID: " --log-level 7

# conntrack日志记录文件

$ grep conntrack /etc/rsyslog.conf

kern.* /var/log/conntrack.log

$ sysctl -w net.ipv4.ip_forward=1

# 参照专用设备配置

$ systcl -w net.netfilter.nf_conntrack_tcp_timeout_time_wait=60

server配置#

# SimpleHTTPServer默认是HTTP/1.0

$ nohup python -m SimpleHTTPServer 80 &

问题复现#

client-1请求#

[root@node1] ~$ curl --local-port 54321 172.16.10.8:80

<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 3.2 Final//EN"><html>

<title>Directory listing for /</title>

<body>

<h2>Directory listing for /</h2>

<hr>

<ul>

<li><a href="nohup.out">nohup.out</a>

<li><a href="test.file">test.file</a>

</ul>

<hr>

</body>

</html>

同时server抓包

[root@localhost] $ tcpdump -ieth0 port 80 -nnS

17:08:16.798635 IP 3.3.10.10.54321 > 192.168.10.9.80: Flags [S], seq 2851797271, win 29200, options [mss 1460,nop,nop,sackOK,nop,wscale 9], length 0

17:08:16.798704 IP 192.168.10.9.80 > 3.3.10.10.54321: Flags [S.], seq 912495348, ack 2851797272, win 29200, options [mss 1460,nop,nop,sackOK,nop,wscale 9], length 0

...省略...

17:08:16.806065 IP 3.3.10.10.54321 > 192.168.10.9.80: Flags [F.], seq 2851797347, ack 912495758, win 60, length 0

17:08:16.806073 IP 192.168.10.9.80 > 3.3.10.10.54321: Flags [.], ack 2851797348, win 58, length 0

client-2模拟请求#

server端的抓包记录可以看出,tcptw->tw_rcv_nxt = 2851797348。使用python scapy,发送一个seq小于2851797348的SYN包

[root@node2] /data0/fake$ python send_test.py 2851797340

[root@node2] /data0/fake$ cat send_test.py

#!/usr/bin/python

#-*- coding:utf-8 -*-

from scapy.all import *

import sys

if len(sys.argv) < 2:

print 'lack args'

sys.exit()

# 创建一个IP数据包,设置源IP、目标IP

ip_packet = IP(src="172.16.9.132", dst="172.16.10.8")

# 创建一个TCP数据包,设置源端口、目标端口和序列号

tcp_packet = TCP(sport=54321, dport=80,flags='S', seq=int(sys.argv[1]))

# 组装数据包

syn = ip_packet / tcp_packet

send(syn)

server端抓包记录可以看到,回复ACK包的ack seq还是2851797348,而不是SYN+ACK

17:08:24.023783 IP 3.3.10.10.54321 > 192.168.10.9.80: Flags [S], seq 2851797340, win 8192, length 0

17:08:24.023842 IP 192.168.10.9.80 > 3.3.10.10.54321: Flags [.], ack 2851797348, win 58, length 0

server端使用bpftrace脚本查看tcp_timewait_state_process()函数收到SYN包的内核处理流程

[root@localhost] $ bpftrace trace-time_wait.bt

Attaching 4 probes...

tcp_timewait_state_process:

3.3.10.10:54321 -> 192.168.10.9:80

[syn:1,ack:0,fin:0,rst:0], seq:1351613457, ack:0, win:32120, tw_rcv_nxt:1125813282

tw_substate: TCP_TIME_WAIT

tcp_tw_status: TCP_TW_SYN

################################

tcp sock处于TCP_TIME_WAIT状态,收到的SYN包seq=1351613457 > tw_rcv_nxt=1125813282,函数返回TCP_TW_SYN

问题延伸#

客户端发送SYN包后,收到ACK包会怎么处理?

查看内核源码,客户端发送SYN后,状态切换为SYN_SENT。收到tcp回包后的处理流程: tcp_v4_rcv -> tcp_v4_do_rcv -> tcp_rcv_state_process -> tcp_rcv_synsent_state_process

看一下tcp_rcv_synsent_state_process函数,如果收到回包设置了ACK标志位,判断该数据包seq和timestamp是否符合预期

(!after(TCP_SKB_CB(skb)->ack_seq, tp->snd_una)

snd_una是对应发送窗口记录的未被确认的seq号,如果ack_seq =< snd_una,返回reset

after(TCP_SKB_CB(skb)->ack_seq, tp->snd_nxt))

snd_nxt是对应发送窗口记录的下一次发送的seq号,如果ack_seq > snd_nxt,返回reset

检查通过后,如果数据包设置了RST标志位,直接丢弃,tcp状态切换为CLOSE。如果没有设置,继续检查是否设置了SYN标志位,如果没有,直接丢弃。

static int tcp_rcv_synsent_state_process(struct sock *sk, struct sk_buff *skb,

const struct tcphdr *th, unsigned int len)

{

struct inet_connection_sock *icsk = inet_csk(sk);

struct tcp_sock *tp = tcp_sk(sk);

struct tcp_fastopen_cookie foc = { .len = -1 };

int saved_clamp = tp->rx_opt.mss_clamp;

tcp_parse_options(skb, &tp->rx_opt, 0, &foc);

if (tp->rx_opt.saw_tstamp && tp->rx_opt.rcv_tsecr)

tp->rx_opt.rcv_tsecr -= tp->tsoffset;

if (th->ack) {

/* rfc793:

* "If the state is SYN-SENT then

* first check the ACK bit

* If the ACK bit is set

* If SEG.ACK =< ISS, or SEG.ACK > SND.NXT, send

* a reset (unless the RST bit is set, if so drop

* the segment and return)"

*/

if (!after(TCP_SKB_CB(skb)->ack_seq, tp->snd_una) ||

after(TCP_SKB_CB(skb)->ack_seq, tp->snd_nxt))

goto reset_and_undo;

if (tp->rx_opt.saw_tstamp && tp->rx_opt.rcv_tsecr &&

!between(tp->rx_opt.rcv_tsecr, tp->retrans_stamp,

tcp_time_stamp)) {

NET_INC_STATS_BH(sock_net(sk), LINUX_MIB_PAWSACTIVEREJECTED);

goto reset_and_undo;

}

/* Now ACK is acceptable.

*

* "If the RST bit is set

* If the ACK was acceptable then signal the user "error:

* connection reset", drop the segment, enter CLOSED state,

* delete TCB, and return."

*/

if (th->rst) {

tcp_reset(sk);

goto discard;

}

/* rfc793:

* "fifth, if neither of the SYN or RST bits is set then

* drop the segment and return."

*

* See note below!

* --ANK(990513)

*/

if (!th->syn)

goto discard_and_undo;

...省略...

构造请求#

可以借助 Scapy_Tcpsession指定tcp sequence发送http请求,来复现

- client-1 发送tcp seqence为1的http请求

[root@nod[root@node1] /data0$ python scapy_http.py 1

[root@nod[root@node1] /data0$ cat scapy_http.py

#!/usr/bin/python

#-*- coding:utf-8 -*-

from Scapy_Tcpsession import TcpSession

import sys

if len(sys.argv) < 2:

print 'lack args'

sys.exit()

sess = TcpSession(('172.16.10.8',80,int(sys.argv[1]),54321))

sess.connect()

http_get=(

"GET / HTTP/1.0\r\n"

"Host: 172.16.10.8:80\r\n"

"User-Agent: Python Scapy\r\n"

"Connection: close\r\n\r\n"

)

sess.send(http_get)

- client-2 请求172.16.10.8:80发现返回正常

[root@node2] ~$ curl --local-port 54321 172.16.10.8:80

、<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 3.2 Final//EN"><html>

<title>Directory listing for /</title>

<body>

<h2>Directory listing for /</h2>

<hr>

<ul>

<li><a href="nohup.out">nohup.out</a>

<li><a href="test.file">test.file</a>

</ul>

<hr>

</body>

</html>

bpftrace跟踪内核处理过程#

使用bpftrace工具观察下client-2 收到ACK包的处理流程

[root@node2] /data0/kernel$ bpftrace synsent.bt

#!/usr/bin/env bpftrace

#include <linux/tcp.h>

#include <net/tcp.h>

BEGIN

{

@tcp_sk_status[2] = "TCP_SYN_SENT";

@tcp_sk_status[9] = "TCP_LAST_ACK";

}

kprobe:tcp_rcv_state_process

{

$sk=(struct sock *)arg0;

$tp=(struct tcp_sock *)$sk;

$skb = (struct sk_buff *)arg1;

$iphd = ((struct iphdr *)($skb->head + $skb->network_header));

$th = ((struct tcphdr *)($skb->head + $skb->transport_header));

$dport = $th->dest;

$dport = ($dport >> 8) | (($dport << 8) & 0x00FF00);

if ($dport == 54321) {

$ack = $th->ack_seq;

$ack = ($ack >> 24) | (($ack & 0x00FF0000) >> 8) | (( $ack & 0x00FF00) << 8) | (($ack & 0xFF) << 24);

$skc_state=$sk->__sk_common.skc_state;

printf("%s:\ntcp_sk_status: %s, syn:%u,ack:%u,fin:%u,ack_seq:%u, snd_una:%u, snd_nxt:%u\n",func,@tcp_sk_status[$skc_state],$th->syn,$th->ack,$th->fin,$ack,$tp->snd_una,$tp->snd_nxt);

$una = $tp->snd_una - $ack;

$int_una = (int32) $una;

// (!after(TCP_SKB_CB(skb)->ack_seq, tp->snd_una)

if (!((int32)($tp->snd_una - $ack) < 0)) {

printf("%d, SEG.ACK =< ISS\n",$int_una);

}

$nxt = $tp->snd_nxt - $ack;

$int_nxt = (int32) $nxt;

// after(TCP_SKB_CB(skb)->ack_seq, tp->snd_nxt))

if ((int32)($tp->snd_nxt - $ack) < 0) {

printf("%d, SEG.ACK > SND.NXT\n",$int_nxt);

}

}

}

END

{

clear(@tcp_sk_status);

}

[root@node2] /data0/kernel$ bpftrace synsent.bt

tcp_rcv_state_process:

tcp_sk_status: TCP_SYN_SENT, syn:0,ack:1,fin:0,ack_seq:88, snd_una:2387012236, snd_nxt:2387012237

-1907955147, SEG.ACK > SND.NXT

tcp_rcv_state_process:

tcp_sk_status: TCP_SYN_SENT, syn:1,ack:1,fin:0,ack_seq:2387012237, snd_una:2387012236, snd_nxt:2387012237

tcp_rcv_state_process:

tcp_sk_status: TCP_LAST_ACK, syn:0,ack:1,fin:0,ack_seq:2387012313, snd_una:2387012312, snd_nxt:2387012313

可以看到,client-2收到server的ACK回包后

- 内核tcp_rcv_state_process函数判断 ack_seq = 88 > snd_una = 2387012236 (u32差值强制int32转换,相差2^31溢出导致),发送server reset数据包

- server端收到reset包后,清理tcp TIME_WAIT连接

- client-2重发syn包,server端返回syn+ack,握手成功

同样使用bpftrace工具观察server的处理过程,

[root@localhost] $ cat trace-time_wait.bt

#!/usr/bin/env bpftrace

#include <linux/tcp.h>

#include <net/tcp.h>

#include <net/inet_timewait_sock.h>

BEGIN

{

@tw_substate[5] = "TCP_FIN_WAIT2";

@tw_substate[6] = "TCP_TIME_WAIT";

@tcp_tw_status[0] = "TCP_TW_SUCCESS";

@tcp_tw_status[2] = "TCP_TW_ACK";

@tcp_tw_status[3] = "TCP_TW_SYN";

}

kprobe:tcp_timewait_state_process

{

$inet = (struct inet_timewait_sock *)arg0;

$tw = (struct tcp_timewait_sock *)$inet;

$skb = (struct sk_buff *)arg1;

$th = (struct tcphdr *)arg2;

$iphd = ((struct iphdr *)($skb->head + $skb->network_header));

if ($iphd->protocol == IPPROTO_TCP) {

$srcaddr = $iphd->saddr;

$dstaddr = $iphd->daddr;

$srcip = ntop($iphd->saddr);

$dstip = ntop($iphd->daddr);

// $th = ((struct tcphdr *)($skb->head + $skb->transport_header));

$dport = $th->dest;

$dport = ($dport >> 8) | (($dport << 8) & 0x00FF00);

if ($dport == 80) {

$sport = $th->source;

$sport = ($sport >> 8) | (($sport << 8) & 0x00FF00);

// $st = *$th;

$seq = $th->seq;

$seq = ($seq >> 24) | (($seq & 0x00FF0000) >> 8) | (( $seq & 0x00FF00) << 8) | (($seq & 0xFF) << 24);

$ack = $th->ack_seq;

$ack = ($ack >> 24) | (($ack & 0x00FF0000) >> 8) | (( $ack & 0x00FF00) << 8) | (($ack & 0xFF) << 24);

$win = $th->window;

$win = ($win >> 8) | (($win << 8) & 0x00FF00);

$tw_rcv_nxt = $tw->tw_rcv_nxt;

printf("%s:\n",func);

printf("%s:%d -> %s:%d\n",$srcip, $sport, $dstip, $dport);

/*

TCP_FIN_WAIT2状态收到fin包:

tcptw->tw_rcv_nxt = TCP_SKB_CB(skb)->end_seq; 回复对方的ack包中 ack=tw_rcv_nxt=seq+1

TCP_SKB_CB(skb)->end_seq = (TCP_SKB_CB(skb)->seq + th->syn + th->fin + skb->len - th->doff * 4);

*/

printf("[syn:%d,ack:%d,fin:%d,rst:%d], seq:%-u, ack:%u, win:%u, tw_rcv_nxt:%u\n",$th->syn, $th->ack, $th->fin, $th->rst, $seq, $ack, $win, $tw_rcv_nxt);

// printf("%d compare %d\n",$th->doff,sizeof($st)>>2);

$state = $inet->tw_substate;

$statestr = @tw_substate[$state];

printf("tw_substate: %s\n",$statestr);

}

}

}

kretprobe:tcp_timewait_state_process

{

$ret = retval;

$statestr = @tcp_tw_status[$ret];

printf("tcp_tw_status: %s\n",$statestr);

print("################################");

}

END

{

clear(@tw_substate);

clear(@tcp_tw_status);

}

[root@localhost] $ bpftrace trace-time_wait.bt

tcp_timewait_state_process:

3.3.10.10:54321 -> 192.168.10.9:80

[syn:1,ack:0,fin:0,rst:0], seq:2387012236, ack:0, win:32120, tw_rcv_nxt:88

tw_substate: TCP_TIME_WAIT

tcp_tw_status: TCP_TW_ACK

################################

tcp_timewait_state_process:

3.3.10.10:54321 -> 192.168.10.9:80

[syn:0,ack:0,fin:0,rst:1], seq:88, ack:0, win:0, tw_rcv_nxt:88

tw_substate: TCP_TIME_WAIT

tcp_tw_status: TCP_TW_SUCCESS

################################

可以看到,server收到SYN包后

- tcp_timewait_state_process函数判断sequence回绕(seq < tw_rcv_nxt),返回给client-2 ACK包

- 收到client-2发送的reset包,直接清理tcp TIME_WAIT连接

测试环境搭建后发现server端TIME_WAIT状态持续时间为60s,这是因为内核版本的差异。参考这篇文章 从 Linux 源码看 TIME_WAIT 状态的持续时间